Trending

Opinion: How will Project 2025 impact game developers?

The Heritage Foundation's manifesto for the possible next administration could do great harm to many, including large portions of the game development community.

An intro to analytics written by the editors of Game Analytics: Maximizing the Value of Player Data, a compendium of insights from more than 50 experts in industry and research, which covers the most relevant questions: what to track, and how to analyze the data.

May 30, 2013

Author: by Magy Seif El-Nasr

A reprint from the May 2013 issue of Gamasutra's sister publication Game Developer magazine, this article polls developers to find out about the challenges and opportunities around developing for Android in 2013. Purchase the May 2013 issue here.

The science of game analytics has gained a tremendous amount of attention in recent years. Introducing analytics into the game development cycle was driven by a need for better knowledge about the players, which benefits many divisions of a game company, including business, design, etc. Game analytics is, therefore, becoming an increasingly important area of business intelligence for the industry. Quantitative data obtained via telemetry, market reports, QA systems, benchmark tests, and numerous other sources all feed into business intelligence management, informing decision-making.

Two of the most important questions when integrating analytics into the development process are what to track, and how to analyze the data. The process of choosing what to collect is called feature selection. Feature selection is a challenge, perhaps especially when it comes to user behavior. There is no single right answer or standard model we can apply to decide what behaviors to track; there are instead several strategies that vary in goals: e.g., improve the user experience or increase monetization. In this article, we will attempt to outline some of the fundamental concerns in user-oriented game analytics, with feature selection as an overall theme. First, we'll walk through the types of trackable user data, and then introduce the feature selection process, where you select how and what to measure. Importantly, this article is not focused on F2P and online games -- analytics is useful for all games.

The three main sources of data for game analytics are:

Performance data: These are related to the performance of the technical- and software-based infrastructure behind a game, notably relevant for online or persistent games. Common performance metrics include the frame rate at which a game executes on a client hardware platform, or in the case of a game server, its stability.

Process data: These are related to the actual process of developing games. Game development is to a smaller or greater degree a creative process, but still requires monitoring, e.g., via task-size estimation and the use of burndown charts.

User data: By far the most common source of data, these are derived from the users who play our games. We view users either as customers (sources of revenue) or players, who behave in a particular way when interacting with games. The first perspective is used when calculating metrics related to revenue -- average revenue per user (ARPU), daily active users (DAU) -- or when performing analyses related to revenue (churn analysis, customer support performance analysis, or microtransaction analysis).

The second perspective is used for investigating how people interact with the actual game system and the components of it and with other players, by focusing on in-game behavior (average playtime, damage dealt per session, and so forth). This is the type of data we will focus on here. These three categories do not cover general business data, e.g., company value, company revenue, etc. We do not consider such data the specific domain of game analytics, but rather as falling within the general domain of business analytics.

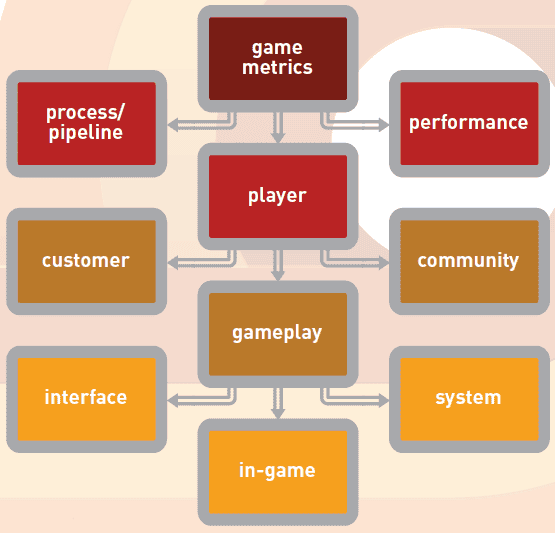

Figure 1: Hierarchical diagram of sources of data for game analytics emphasizing user metrics.

Many people have proposed different methods of classifying user data over the past few years. From a top-down perspective, a development-oriented classification system is useful, as it serves to funnel user metrics in the direction of three different classes of stakeholders -- for example, as follows.

Customer metrics: Covers all aspects of the user as a customer -- for example, cost of customer acquisition and retention. These types of metrics are notably interesting to professionals working with marketing and management of games and game development.

Community metrics: Covers the movements of the user community at all levels of resolution, such as forum activity. These types of metrics are useful to community managers.

Gameplay metrics: Any variable related to the actual behavior of the user as a player inside the game (object interaction, object trade, and navigation in the environment, for example). Gameplay metrics are the most important for evaluating game design and user experience, but are furthest from the traditional perspective of the revenue chain in game development, and hence are generally underprioritized. These metrics are useful to professionals working with design, user research, quality assurance, or any other position where the actual behavior of the users is of interest.

Customer metrics: As a customer, users can download and install a game, purchase any number of virtual items from in-game or out-of-game stores and shops, spending real or virtual currency, over shorter or longer timespans. At the same time, customers interact with customer service, submitting bug reports, requests for help, complaints, and so on. Users can also interact with forums, official or not, or other social-interaction platforms, from which information about these users, their play behavior, and their satisfaction with the game can be mined and analyzed. We can also collect information on customers' countries, IP addresses, and sometimes even age, gender, and email addresses. Combining this kind of demographic information with behavioral data can provide powerful insights into a game's customer base.

Community metrics: Users interact with each other if they have the opportunity. This interaction can be related to gameplay (combat or collaboration through game mechanics) or social (in-game chat). Player-player interaction can occur in-game or out-of-game, or some combination thereof -- for example, sending messages bragging about a new piece of equipment using a post-to-Facebook function. In-game, users can interact with each other via chat functions, out-of-game via live conversation (TeamSpeak or Skype), or via game forums.

These kinds of interactions between players form an important source of information, applicable in an array of contexts. For example, a social-network analysis of the user community in a F2P game can reveal players with strong social networks -- who are the players likely to help retain a big number of other players in the game by creating a good social environment (think guild leaders in MMORPGs). Likewise, mining chat logs and forum posts can provide information about problems in a game's design. For example, data-mining datasets derived from chat logs in an online game can reveal bugs or other problems. Monitoring and analyzing player-player interaction is important in all situations where there are multiple players, but especially in games that attempt to create and support a persistent player community, and which have adopted an online business model, which includes many social online games and F2P games. These examples are just the tip of a very deep iceberg, and the collection, analysis, and reporting on game metrics derived from player-player interaction is a topic that could easily take up several volumes.

Gameplay metrics: This subcategory of the user metrics is perhaps the most widely logged and utilized type of game telemetry currently in use. Gameplay metrics are measures of player behavior: navigation, item and ability use, jumping, trading, running, and whatever else players actually do inside the virtual environment of a game (whether 2D or 3D). Four types of information can be logged whenever a player does something or something happens to a player in a game: What is happening? Where is it happening? At what time is it happening? And: Who is involved?

Gameplay metrics are particularly useful for informing game design. They provide the opportunity to address key questions, including whether any game world areas are over- or underused, if players utilize game features as intended, and whether there are any barriers hindering player progression. These kind of game metrics can be recorded during all phases of game development, as well as following launch.

Players can generate thousands of behavioral measures over the course of a single game session -- every time a player inputs something to the game system, it has to react and respond. Accurate measures of player activity can include dozens of actions being measured per second. Consider, for example, players in a typical fantasy MMORPG like World of Warcraft: Measuring user behavior could involve logging the position of the player's character, its current health, mana, stamina, the time of any buffs affecting it, the active action (running, swinging an axe), the mode (in combat, trading, traveling), the attitude of any NPC enemies toward the player, the player character name, race, level, equipment, currency, and so on -- all these bits of information simply flow from the installed game client to the collection servers.

From a practical perspective, you may want to further subdivide gameplay metrics into the following three categories (in order to make your metrics more searchable, for instance):

In-game: Covers all in-game actions and behaviors of players, including navigation, economic behavior, as well as interaction with game assets such as objects and entities. This category will in most cases form the bulk of collected user telemetry.

Interface: Includes all interactions the player performs with the game interface and menus. This includes setting game variables, such as mouse sensitivity and monitor brightness.

System: System metrics cover the actions game engines and their subsystems (AI system, automated events, MOB/NPC actions, and so on) initiate to respond to player actions. For example, a MOB attacking a player character if it moves within aggro range, or progressing the player to the next level upon satisfaction of a predefined set of conditions.

To sum up, the array of potential measures from the users of a game (or game service) can be staggering, and generally we should aim for logging and analyzing the most essential information. This selection process imposes a bias, but is often necessary to avoid data overload and to ensure a functional workflow in analytics.

Bias is introduced in the dataset both by the selection of the features to be monitored and also by the measuring strategies adopted, and that happens to a large degree when analysts work in a vacuum. If those responsible for analytics cannot communicate with all relevant stakeholders, critical information will invariably end up missing and the full value of analytics will not be realized.

Analytics groups are placed differently across companies due to analytics arriving to the industry from different directions, notably user research, marketing, and monetization, and this can lead to a situation where the analytics team only services or prioritizes their parent department. Having a strong lateral integration -- making sure that the analytics team communicates with all the teams, for example -- helps to avoid this issue. This also helps alleviate the common problem that the analytics teams, without having sufficient access to design teams, are forced to self-select features to track and analyze, without having the proper grounding in the design of the game and its monetization model.

Even for a small developer with a part-time analyst this can be a problem. Another typical problem is that the decision about which behaviors to track is made without involving the analytics team. This can lead to a lot of extra time spent later on trying to work with data that are not exactly what is needed, or needing to record additional datasets. Good communication between teams also helps alleviate friction between analytics and design.

Importantly, analytics should be integrated from the onset of a production -- all the way back in the early design phases. Early on it should be planned what kinds of behavior that should be tracked and with what types of frequencies. This allows for optimal planning of how to ensure value from analytics to design, monetization, marketing, etc. Analytics should never be slapped on sometime after the beta. In this way analytics is similar to other tools like user research, in that it ideally is embedded throughout the development processes, and after launch.

Knowing that there is an array of things we can measure about user behavior, how do we then select among them? And do we really have to make choices here? Sadly, yes. In real life, we rarely have the resources to track and analyze all possible user behaviors, which means we have to develop an approach to analytics that considers cost-benefit relationships between the resources required for tracking, storing, and analyzing user telemetry/metrics on one hand, and the value of the insights obtained on the other. It is also important to be aware that the analyses needed during different stages of production and post-launch varies. For example, during the latter phases of development, tuning design is vital, but many metrics related to monetization cannot be calculated because purchases have not been made by the target audience yet.

We will discuss this in more detail below, but in short, by following this line of reasoning, the minimum set of user attributes that should be tracked, stored, and analyzed should include considerations as to the following:

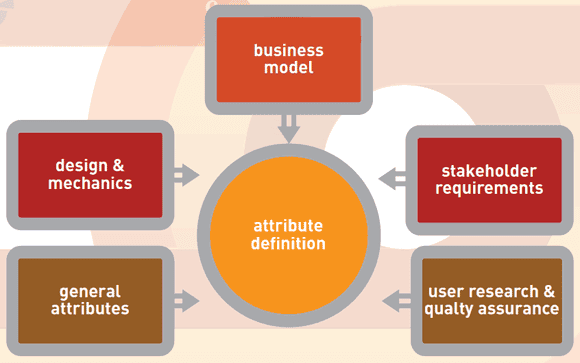

1) General attributes: The attributes that are shared for users (as customers and players) across all games. These form the core metrics that can always be collected, for any computer game -- for example, the time at which a user starts or stops playing, a user ID, user IP, entry point, and so on. These form the core of any game analytics dataset.

2) Core mechanics/design attributes: The essential attributes related to the core of the gameplay and mechanics of the game. (For example, attributes related to time spent playing, virtual currency spent, number of opponents killed, and so on.) Defining the core design attributes should be based directly on the key gameplay mechanics of the game, and should provide information that lets designers make inferences about the user experience (whether players are progressing as planned, if flow is sustained, death ratios, level completions, point scores).

3) Core business attributes: The essential attributes related to the core of the business model of the company, for example, logging every time a user purchases a virtual item (and what that item is), establishes a friend connection in-game, or recommends the game to a Facebook friend -- or any other attributes related to revenue, retention, virality, and churn. For a mobile game, geolocation data can be very interesting to assist target marketing. In a traditional retail situation, none of these are of interest, of course.

4) Stakeholder requirements: In addition, there can be an assortment of stakeholder requirements that need to be considered. For example, management or marketing may place a high value on knowing the number of Daily Active Users (DAU). Such requirements may or may not align with the categories mentioned above.

5) QA and user research: Finally, if there is any interest in using telemetry data for user research/user testing and quality assurance (recording crashes and crash causes, hardware configuration of client systems, and notable game settings), it may be necessary to augment to attributes on the list of features accordingly.

When building the initial attribute set and planning the metrics that can be derived from them, you need to make sure that the selection process is as well informed as possible, and includes all the involved stakeholders. This minimizes the need to go back to the code and embed additional hooks at a later time -- which is a waste that can be eliminated with careful planning.

That being said, as the game evolves during production as well as following launch (whether a persistent game or through DLCs/patches), it will typically be necessary to some degree to embed new hooks in the code in order to track new attributes and thus sustain an evolving analytics practice. Sampling is another key consideration. It may not be necessary to track every time someone fires a gun, but only 1 percent of these. Sampling is a big issue in its own right, and we will therefore not delve further on this subject here, apart from noting that sampling can be an efficient way to cut resource requirements for game analytics.

Figure 2: The drivers of attribute selection for user behavior attributes. Given the broad scope of application of game analytics, a number of sources of requirements exist.

One important factor to consider during the feature selection process is the extent to which your attribute set selection can be driven by pre-planning, by defining the game metrics and analysis results (and thereby the actionable insights) we wish to obtain from user telemetry and select attributes accordingly.

Reducing complexity is necessary, but as you restrict the scope of the data-gathering process, you run the risk of missing important patterns in user behavior that cannot be detected using the preselected attributes. This problem is exasperated in situations where the game metrics and analyses are also predefined -- for example, relying on a set of Key Performance Indicators (such as DAU, MAU, ARPU, LTV, etc.) can eliminate your chance of finding any patterns in the behavioral data not detectable via the predefined metrics and analyses. In general, striking a balance between the two situations is the best solution, depending on available analytics resource. For example, focusing exclusively on KPIs will not tell you about in-game behavior, e.g., why 35 percent of the players drop out on level 8 -- for that we need to look at metrics related to design and performance.

It is worth noting that when it comes to user analytics, we are working with human behavior, which is notoriously unpredictable. This means that predicting user analytics requirements can be challenging. This emphasizes the need for the use of both explorative (we look at the user data to see what patterns they contain) and hypothesis-driven methods (we know what we want to measure and know the possible results, not just which one is correct).

During gameplay, a user creates a continual loop of actions and responses that keep the game state changing. This means that at any given moment, there can be many features of user behavior that change value. A first step toward isolating which features to employ during the analytical process could be a comprehensive and detailed list of all possible interactions between the game and its players. Designers are extremely knowledgeable about all possible interactions between the game and players; it's beneficial to harness that knowledge and involve designers from the beginning by asking them to compile such lists.

Secondly, considering the sheer number of variables involved even in the simplest game, it is necessary to reduce the complexity through a knowledge-driven factor reduction: Designers can easily identify isomorphic interactions. These are groups of similar interactions, behaviors, and state changes that are essentially similar even if formally slightly different. For example "restoring 5 HP with a bandage" or "healing 50 HP with a potion" are formally different but essentially similar behaviors. The isomorphic interactions are then grouped into larger domains. Lastly, it's required to identify measures that capture all isomorphic interactions belonging to each domain. For example, for the domain "healing," it's not necessary to track the number of potions and bandages used, but just record every state change to the variable "health."

These domains have not been derived through objective factor reduction; there is a clear interpretive bias any time humans are asked to group elements in categories, even if designers have exhaustive expert knowledge. These larger domains can potentially contain all the possible behaviors that players can express in a game and at the same time help select which game variables should be monitored, and how.

Machine learning is a field of study that gives computers the ability to learn without being explicitly programmed. More than an alternative to designer-driven strategies, automated feature selection is a complementary approach to reducing the complexity of the hundreds of state changes generated by player-game interactions. Traditionally, automated approaches are applied to existing datasets, relational databases, or data warehouses, meaning that the process of analyzing game systems, defining variables, and establishing measures for such variables, falls outside of the scope of automated strategies; humans already have defined which variables to track and how. Therefore, automated approaches individuate only the most relevant and the most discriminating features out of all the variables monitored.

Automated feature selection relies on algorithms to search the attribute space and drop features that are highly correlated to others; algorithms can range from simple to complex. Methods include approaches such as clustering, classification, prediction, and sequence mining. These can be applied to find the most relevant features, since the presence of features that are not relevant for the definition of types affects the similarity measure, degrading the quality of the clusters found by the algorithm.

In a situation with infinite resources, it is possible to track, store, and analyze every user-initiated action -- all the server-side system information, every fraction of a move of an avatar, every purchase, every chat message, every button press, even every keystroke. Doing so will likely cause bandwidth issues, and will require substantial resources to add the message hooks into the game code, but in theory, this brute-force approach to game analytics is possible.

However, it leads to very large datasets, which in turn leads to huge resource requirements in order to transform and analyze them. For example, tracking weapon type, weapon modifications, range, damage, target, kills, player and target positions, bullet trajectory, and so on, will enable a very in-depth analysis of weapon use in an FPS. However, the key metrics to evaluate weapon balancing could just be range, damage done, and the frequency of use of each weapon. Adding a number of additional variables/features may not add any new relevant insights, or may even add noise or confusion to the analysis. Similarly, it may not be necessary to log behavioral telemetry from all players of a game, but only a percentage (this is of course not the case when it comes to sales records, because you will need to track all revenue).

In general, if selected correctly, the first variables/features that are tracked, collected, and analyzed will provide a lot of insight into user behavior. As more and more detailed aspects of user behavior are tracked, costs of storage, processing, and analysis increase, but the rate of added value from the information contained in the telemetry data diminishes.

What this means is that there is a cost-benefit relationship in game telemetry, which basically describes a simplified theory of diminishing returns: Increasing the amount of one source of data in an analysis process will yield a lower per-unit return.

A classic example in economic literature is adding fertilizer to a field. In an unbalanced system (underfertilized), adding fertilizer will increase the crop size, but after a certain point this increase diminishes, stops, and may even reduce the crop size. Adding fertilizer to an already-balanced system does not increase crop size, or may reduce it.

Fundamentally, game analytics follow a similar principle. An analysis can be optimized up to a specific point given a particular set of input features/variables, before additional (new) features are necessary. Additionally, increasing the amount of data into an analysis process may reduce the return, or in extreme cases lead to a situation of negative return due to noise and confusion added by the additional data. There can of course be exceptions -- for example, the cause of a problematic behavioral pattern, which decreases retention in a social online game, can rest in a single small design flaw, which can be hard to identify if the specific behavioral variables related to the flaw are not tracked.

User-oriented game analytics typically have a variety of purposes, but we can broadly divide them into the following:

Strategic analytics, which target the global view on how a game should evolve based on analysis of user behavior and the business model.

Tactical analytics, which aim to inform game design at the short-term, for example an A/B test of a new game feature.

Operational analytics, which target analysis and evaluation of the immediate, current situation in the game. For example, informing what changes you should make to a persistent game to match user behavior in real-time.

To an extent, operational and tactical analytics inform technical and infrastructure issues, whereas strategic analytics focuses on merging user telemetry data with other user data and/or market research.

When you're plotting a strategy for approaching your user telemetry, the first factors you should concern yourself with are the existence of these three types of user-oriented game analytics, the kinds of input data they require, and what you need to do to ensure that all three are performed, and the resulting data reported to the relevant stakeholder.

The second factor to consider is to clarify how to satisfy both the needs of the company and the needs of the users. The fundamental goal of game design is to create games that provide a good user experience. However, the fundamental goal of running a game development company is to make money (at least from the perspective of the investors). Ensuring that the analytics process generates output supporting decision-making toward both of these goals is vital. Essentially, the underlying drivers for game analytics are twofold: 1) ensuring a quality user experience, in order to acquire and retain customers; 2) ensuring that the monetization cycle generates revenue -- irrespective of the business model in question. User-oriented game analytics should inform both design and monetization at the same time. This approach is exemplified by companies that have been successful in the F2P marketplace who use analysis methods like A/B testing to evaluate whether a specific design change increases both user experience (retention is sometimes used as a proxy) and monetization.

Up to this point, the discussion about feature selection has been at a somewhat abstract level, attempting to generate categories guiding selection, ensuring comprehensiveness in coverage rather than generating lists of concrete metrics (shots fired/minute per weapon, kill/death ratio, jump success ratio). This because it is nigh-on impossible to develop generic guidelines for metrics across all types of games and usage situations. This not just because games do not fall within neat design classes (games share a vast design space and do not cluster at specific areas of it), but also because the rate of innovation in design is high, which would rapidly render recommendations invalid. Therefore, the best advice we can give on user analytics is to develop models from the top down, so you can ensure comprehensive coverage in data collection, and from the core out, starting from the main mechanics driving the user experience (for helping designers) and monetization (for helping making sure designers get paid). Additional detail can be added as resources permit. Finally, try to keep your decisions and process fluent and adaptable; it's necessary in an industry as competitive and exciting as ours.

Magy Seif El-Nasr, Anders Drachen, and Alessandro Canossa are the editors of Game Analytics: Maximizing the Value of Player Data, a recently published compendium of insights from more than 50 experts in industry and research. This article is based on selected content from the book.

Read more about:

FeaturesYou May Also Like