Integrating biofeedback into games is finally accessible for developers and players alike. This post highlights the state of biofeedback tech in games as of mid-2016 and presents options for how developers can start exploring it for their own titles.

A few years ago, I wrote a Gamasutra blog post about biofeedback in games. You can read that here and, in the interest of getting to “the good stuff” sooner rather than later, I’ll try to avoid repeating a lot of the details I discuss there. While much of what I wrote in “Quit Playing Games with My Heart: Biofeedback in Gaming” remains just as true today as it was back in 2013, a lot of exciting developments have happened on this front since then. As such, I wanted to revisit that piece, discuss some of the new possibilities that have opened up and, as a player and believer in this technology, make another plea for other developers to consider incorporating it into their own games.

The ability to integrate biofeedback into your game is now incredibly accessible. Any developer, any game - regardless of genre, size, goals, how overt you’d want the biofeedback responses to be, etc. - can benefit from the tech available today. So, check out things like the Intel® RealSense™ camera SDK (SR300) or the Affectiva Affdex SDK. The technology is out there and easy to integrate, and it now truly is up to us as developers to harness the amazing opportunity it offers to make our games more personal, responsive, and engaging than we’ve ever been able to before.

Also, as a disclaimer, if at any point I sound like an advertisement for any one technology, I apologize. The truth of the matter is that if we (the Nevermind team and I) didn’t think a piece of tech was worthwhile, we wouldn’t be working with it in the first place. My goal here is to present a few specific technologies that are currently available to developers (especially Unity developers like us) in the effort to help others find a good starting point for integrating biofeedback into their own games.

For those of you who aren’t familiar with me or the previous piece I wrote (don’t worry if you’re not, I still think you’re cool), I’m the creator of a game called Nevermind. Nevermind is a biofeedback-enhanced psychological thriller game that responds to indications of the player’s near-real-time feelings of stress, fear, and/or anxiety while playing. It started as my MFA thesis project at the University of Southern California back in 2012, and the earlier Gamasutra piece I wrote came about a year after that version of the game (effectively, just a proof of concept) was completed.

A lot has happened since then!

We’ve rebuilt Nevermind from the ground up and expanded it into a full game now available for Windows/Mac on Steam! You can learn more about it and download it here. I’ll also take a moment to mention that you can now experience the biofeedback functionality with nothing more than the game and a standard webcam thanks to Affectiva’s new Unity plug-in, if you’re curious. More on the technology behind that below.


Biofeedback in games is essentially the ability for a game to have a subconscious two-way “conversation” with a player via a sensory input device (such as a heart rate sensor, emotion responsive technology, or a neurofeedback instrument). Generally how it works is that the game provides a certain experience for the player, the player reacts to that experience subconsciously (e.g. via physiological or emotional responses), the sensor picks up on those reactions, and the game responds by adjusting the experience accordingly.

For example, in Nevermind, if any of its supported technology picks up signals that the player might be feeling scared, stressed, or anxious, the game responds by becoming more difficult. For instance, the screen will become more static-y (obscuring vision and essentially making puzzles harder to solve) and/or environmental obstacles, like milk flooding the room, may appear. We choose to use biofeedback in this way in Nevermind to help encourage players to become more mindful of their feelings of stress and anxiety and learn to manage those sensations of stress on the fly. However, this is just one of many potential ways that biofeedback can be integrated into a gameplay experience.
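To make that loop concrete, here is a minimal sketch in Python (chosen only for readability; it is not Unity code and not Nevermind's actual implementation). It assumes the sensor layer already delivers a stress reading between 0.0 and 1.0. The names `StressSmoother` and `static_intensity`, the smoothing factor, and the threshold are all hypothetical values picked for illustration.

```python
from dataclasses import dataclass


@dataclass
class StressSmoother:
    """Exponentially smooths noisy stress readings in the range 0.0 to 1.0."""

    alpha: float = 0.2  # hypothetical smoothing factor; higher reacts faster
    level: float = 0.0  # current smoothed stress estimate

    def update(self, reading: float) -> float:
        # Clamp out-of-range sensor values before blending them in.
        reading = min(1.0, max(0.0, reading))
        self.level += self.alpha * (reading - self.level)
        return self.level


def static_intensity(stress: float, threshold: float = 0.3) -> float:
    """Map smoothed stress to a 0..1 screen-static strength.

    Below the (hypothetical) threshold the screen stays clear; above it,
    static ramps up linearly and reaches full strength at stress == 1.0.
    """
    if stress <= threshold:
        return 0.0
    return (stress - threshold) / (1.0 - threshold)


if __name__ == "__main__":
    smoother = StressSmoother()
    for reading in [0.1, 0.2, 0.9, 0.9, 0.9, 0.2]:  # made-up sensor samples
        stress = smoother.update(reading)
        print(f"reading={reading:.1f} static={static_intensity(stress):.2f}")
```

Smoothing the signal before acting on it keeps a single noisy sample from making the screen flicker, and the threshold leaves a calm zone in which the game does not react at all.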

Nevermind has historically determined when the player might be feeling scared or anxious via the player’s heart rate, from which our proprietary algorithm derives Heart Rate Variability (HRV), a biometric indicator that correlates well with a player’s psychological arousal levels. Now, that may not mean exactly what you think it does. As explained a bit more thoroughly in the previous article, “Arousal can be thought of as the intensity of a feeling – it’s the scale of ‘meh’ to ‘OMG’.” It’s essentially how “amped up” a person is. High arousal can be positive or negative - elated or furious, excited or stressed, etc. Valence, arousal’s counterpart, determines the quality of the emotion while, in a loose sense, arousal determines the quantity. More on valence in a bit.
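Nevermind's HRV algorithm is proprietary and is not described here. As a stand-in, the sketch below computes RMSSD (root mean square of successive differences), a widely used time-domain HRV measure. It is written in Python for illustration only, and the function names and the assumption that you have heartbeat timestamps in seconds are mine. In general, lower short-term variability tends to accompany higher stress, but how you map the number onto gameplay is a tuning decision.

```python
import math


def rr_intervals_ms(beat_times_s):
    """Convert heartbeat timestamps (seconds) into beat-to-beat intervals (ms)."""
    return [(b - a) * 1000.0 for a, b in zip(beat_times_s, beat_times_s[1:])]


def rmssd(rr_ms):
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


if __name__ == "__main__":
    beats = [0.00, 0.80, 1.61, 2.40, 3.22]  # made-up beat timestamps
    print(f"RMSSD: {rmssd(rr_intervals_ms(beats)):.1f} ms")
```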

Physiological biofeedback, commonly based on internal signals like heart rate, is great because it’s hard to “game” the system given that it provides insight into the “raw” data of the body’s response to a given experience. Getting that near-direct input of whether a player is amped up or mellow during any given part of a game is hugely valuable. It can offer an indication of whether players are bored, excited, frustrated, calm, etc. and can be used as a way for a game to adjust as needed through, for example, something like dynamic difficulty.

Furthermore, integrating physiological biofeedback like heart rate detection into games is even easier now than it was when I described it a few years ago. As many know, I’m a big fan of Intel’s RealSense technology which, amongst many other things, can read heart rate through the camera itself - without any wearables. The RealSense SDK for Unity is out there and available for devs - giving almost any developer instant access to the ability to seamlessly read heart rate. Of course, there are many other great HR sensors that devs can readily work with and are commercially available as well - the Apple Watch, ANT+ enabled sensors, Bluetooth sensors that use the Heart Rate profile, etc.

Intel® RealSense™ Camera Technology


Being able to measure arousal through physiological heart rate sensors was a great fit for Nevermind since, given that it is a thriller/horror game, it could be assumed that most instances of elevated arousal are an indication that the player is feeling a negative form of arousal in the form of fear or tension.

However, we’ve observed a few areas where false positives could be picked up. The example I use in the previous piece (which is still an issue today when playing with only a physiological biofeedback sensor) takes place in a particularly challenging maze in Nevermind. When observing players reach the end of the maze (in a playtest or Let’s Play video), we’ve seen that there’s often a spike in the biofeedback response (typically an indication of fear or stress) near the end of the maze - even though there is nothing particularly threatening happening at that point in time. What we have come to find is that, in this instance, the player is often actually thrilled to be at the end of the maze and the physiological sensor is picking up on that excitement (high arousal...but with a positive valence). If only there were something we could do to get better insight into the player’s valence at any given moment...

In my earlier article, I conclude a section with “This future is a bright and exciting one indeed. However, until valence detection is refined and paired with arousal sensors, we’ll just have to do the best we can with the arousal detecting sensors that are currently out there.”

Well, as of just a few months ago, valence detection is now available to us and, having worked with it for the past several months, I’m a believer. Valence detection, or perhaps better described as emotion recognition, can determine the quality of what a player might feel - positive, negative, happy, sad, etc. It can even presume arousal levels to an extent. Unlike physiological biofeedback, which gets a somewhat direct signal about the body’s reaction, emotion-based biofeedback as it is available now can sense how the player’s reaction manifests as an outward response through changes in facial expressions.

When I first started Nevermind back in 2011, emotion recognition technology based on facial expressions was an exciting concept and undergoing extensive exploration in the academic and research realm. However, at that time, the tech simply wasn’t good enough to be used out in the wild for the purposes of gaming. Now, several years later, Affectiva, a group that has been focused on this technology for a long time, recently released a Unity plug-in that makes their emotion recognition software, Affdex, available for developers. We’ve since integrated it into Nevermind and I can attest that the technology is incredible.

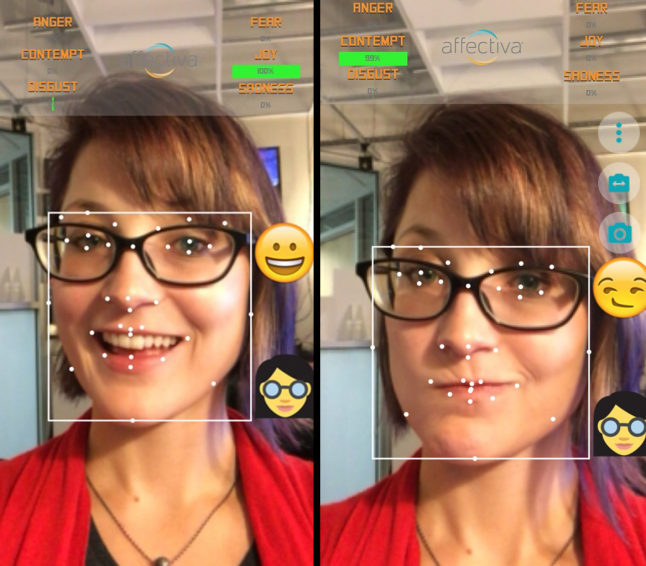

Having fun with Affectiva's AffdexMe app (iOS, Android) - illustrating just a small handful of what the Affdex plug-in is capable of.

With any webcam (and I’ll get to the significance of that shortly), the Affdex software can read tons of nuances in facial movements - getting data on everything from specific emotional responses (such as happiness or even contempt) to the facial movement itself (such as a slight eyebrow raise).

One of the biggest barriers to entry in getting the “full experience” of Nevermind into players’ hands is the previous need for specialized technology (i.e. a heart rate sensor) to enable biofeedback detection. We continue to support a number of fantastic sensors with the hope that players can use a device that they already own or, if they buy one, will be able to use it for purposes outside of Nevermind. Nonetheless, the need (or optional need) for any peripheral always presents a challenge for any game. What’s really wonderful about Affectiva’s new plugin is that it enables the emotion-based biofeedback to be available to any player who has a basic webcam - which, for most Windows/Mac players, is often a given.

Integrating emotion-based biofeedback into Nevermind was an interesting challenge at first, since these indications do not correlate one-to-one with the physiological data we had traditionally derived from Heart Rate and Heart Rate Variability. Our original plan was to simply hook up Affdex’s “fear” metric as a stand-in for our HRV-based fear metric, but we found, after several tests, that it didn’t feel the same. After many weeks of exploration, tests, and iteration, we realized that, because Nevermind is more of a mystery game built on a sense of growing dread rather than jump scares and shock-imagery, using subtle inputs such as “disgust” was more successful. What we ended up with was a biofeedback experience that feels similar whether you play with one of our physiological sensors (i.e. heart rate sensors) or just the webcam alone.
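One way to experiment with this kind of tuning is to blend several emotion scores into a single stress signal rather than relying on one metric. The Python sketch below is purely illustrative: it assumes emotion scores normalized to 0..1, and the emotion names and weights are hypothetical placeholders, not Nevermind's actual mapping or Affdex's API.

```python
def stress_score(emotions, weights):
    """Weighted average of emotion scores (each 0..1).

    Emotions missing from the reading count as 0. The weights are the knobs
    you would tune through playtesting.
    """
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    blended = sum(w * emotions.get(name, 0.0) for name, w in weights.items())
    return blended / total_weight


if __name__ == "__main__":
    # Hypothetical weights favoring a subtle signal over overt fear.
    weights = {"fear": 1.0, "disgust": 3.0}
    reading = {"fear": 0.2, "disgust": 0.8}  # made-up frame of emotion scores
    print(f"stress: {stress_score(reading, weights):.2f}")
```

Keeping the weights in data rather than in code makes it cheap to try a different blend in each playtest.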

People often ask me which one is better. To be honest, they’re both fantastic - they’re just different. The technologies each read different signals and, as such, emotion-based biofeedback may be "better" in some instances than physiological biofeedback and vice versa. Of course, the ideal scenario is combining the two - pairing physiological biofeedback input with emotional biofeedback input for indications of both arousal and valence, giving the game the full spectrum of what the player may be feeling. With Nevermind, this can be accessed by playing with both Affdex and a Heart Rate sensor and, we’ve found, the result is a very responsive-feeling experience with fewer false positives. However, we’ve barely scratched the surface of what can be accomplished with physiological biofeedback, emotion-based biofeedback, or both. We’re having a blast geeking out over it, but we’re a tiny studio that can do only so much, and we want to see what vastly different experiences other folks can create with it.
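A rough sketch of how pairing the two signals can filter out false positives like the end-of-maze spike: treat arousal as "how much" and valence as "which kind", and only escalate when both point to distress. This is Python for illustration, with assumed ranges (arousal 0..1, valence -1..1), a hypothetical threshold, and hypothetical function names. It is not how Nevermind combines its inputs.

```python
def classify_state(arousal, valence, arousal_threshold=0.6):
    """Label the player's state from arousal (0..1) and valence (-1..1)."""
    if arousal < arousal_threshold:
        return "calm"
    if valence < 0:
        return "distressed"  # amped up and negative: fear, stress, anxiety
    return "excited"  # amped up but positive: e.g. thrilled to finish a maze


def should_escalate(arousal, valence):
    """Only make the game harder when high arousal comes with negative valence."""
    return classify_state(arousal, valence) == "distressed"


if __name__ == "__main__":
    print(classify_state(0.9, 0.7))   # high arousal, positive valence
    print(classify_state(0.9, -0.5))  # high arousal, negative valence
    print(classify_state(0.2, -0.5))  # low arousal
```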

Admittedly, Nevermind goes for the “low hanging fruit” when it comes to leveraging technology like this. Using horrific imagery to affect the player isn’t, in and of itself, the most unexpected approach.

There are so many ways that biofeedback - physiological and emotional - can manifest in games (both overtly and subtly). This is something I think and read about a lot. As such, I want to share a few ideas here with the hope that it inspires you, fellow awesome developer, to give it a whirl in your own game:

Dynamic Difficulty - The game gets easier as it detects that the player is becoming more frustrated, and more difficult as it detects that the player is becoming bored. Unwanted grinding is reduced and player engagement is, hopefully, increased.

Analytics - Knowing how the player is responding to parts of your game can be invaluable to making the experience better (this isn’t new info). However, getting a sense of what’s “going on under the hood” as the player responds can be very valuable, unfiltered feedback that can be used to better tune the experience, either specific to that user on the fly or in aggregate via content and tuning updates.

Customized Experience - Knowing how a player is feeling when he/she sits down at your game can open up all kinds of opportunities to create an experience to fit the mood. Is your player feeling particularly tense? Maybe you want to calm him/her before starting the core experience. Is the player feeling happy? Sad? Maybe NPCs can reflect and/or empathize with him/her.
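The dynamic difficulty idea above is probably the simplest of these to prototype. Here is a minimal Python sketch, assuming you already have frustration and boredom estimates in the 0..1 range from whatever sensor you use. The function name, step size, and trigger level are hypothetical starting points rather than recommended values.

```python
def adjust_difficulty(difficulty, frustration, boredom, step=0.05, high=0.7):
    """Nudge difficulty (0..1) down when frustrated and up when bored.

    Frustration wins ties so that a struggling player is never pushed harder.
    """
    if frustration >= high and frustration >= boredom:
        difficulty -= step
    elif boredom >= high:
        difficulty += step
    # Keep the result inside the valid difficulty range.
    return min(1.0, max(0.0, difficulty))


if __name__ == "__main__":
    level = 0.5
    for frustration, boredom in [(0.9, 0.1), (0.8, 0.2), (0.1, 0.9)]:  # made-up samples
        level = adjust_difficulty(level, frustration, boredom)
        print(f"difficulty is now {level:.2f}")
```

Small steps applied at a slow cadence (say, once per encounter rather than every frame) tend to keep the adjustment from feeling obvious to the player.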

The possibilities are endless with emotion-responsive games

I could write a thousand pages or more on this if left to my own devices, but have tried to keep this article as brief as I can.

The essence is that biofeedback in gaming is only getting better, more accessible, and more interesting as time goes on. However, it’s never going to reach its true potential unless creative and clever developers do creative and clever things with it. As a studio focused on working with cool, emerging technology, we really love experimenting with it - but we alone can’t realize all the possibilities it offers.

Whether you’re an indie studio, mobile games maker, student, AAA studio VP of technology, etc., this is technology that will benefit and change the face of games of all shapes and sizes, and I can’t wait to see what amazing things other developers, like you, will do with it!
