

This two-part blog post outlines our experiences with the chaotic self-organizing system that drives the core simulation of our game “Let's Grow!”, while also offering up some thoughts on what systems like this have to offer both developers and end-users.

A short intro

In this two-part blog post, I want to share some of our experiences with the chaotic self-organizing system that drives the core simulation of our Unity-based game “Let's Grow!” (currently iPad only), while also offering up a few thoughts on what systems like this have to offer both game developers and end-users. This first part is going to be rather techy, but for the not-so-techy readers out there, I promise to make Part 2 somewhat lighter.

Now, about 18 months ago we got the funding for pre-production of “Let's Grow!”, based on a prototype and concept pitch created by myself and one of our prototype developers, Rasmus Heeger. Our first prototype was merely an easy-to-use 3D modeler, where the player could draw plant stems, place leaves and flowers and cut it all off again. It was relatively simple, but quite fun and seemed to offer an immediate satisfaction and a kind of zen to anyone who tried it. It definitely seemed that there was something there...

Screens from the first prototype

However, in the concept document we had aimed far beyond the prototype. We wanted the plants to feel alive, giving the player the experience and creative challenge of working with living plants rather than dead 3D models. In the final pitch document, I outlined our ambition like this:

The aim of this project is to create an interactive creative experience, focussing on creativity, creation and immersion, rather than progress and winning. In the application the user will be allowed to create a plant from seed into fully grown tree – with the aim of offering the user the most realistic, unhindered creative experience of working with a living thing.

When the funding actually came through, this meant that we had to figure out how to actually make this come true – how to put nature in a box.

Our Approach

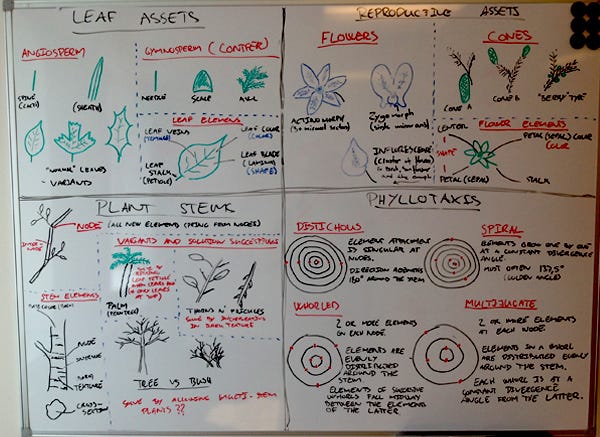

While we iterated on the interface, setting and art style and storyboarded the full app, we started doing research and design on what we ended up referring to as our natural growth system. Trying to work smarter rather than harder, we started out by reading numerous articles on plant modeling, primarily from biological research, focussing on L-systems and self-organization. We soon chose a self-organizing approach, as these systems seemed to produce the most realistic and varied plants, whereas the L-system generated plants seemed more like idealized plants. At the same time, we did a great deal of research into the science of real plants, in order to get an overview of how real plants look and work, which is surprisingly systematic and interesting! (Sometimes a game designer's work takes you to strange and wonderful places.)

My whiteboard, while doing our initial research

Over a few months this resulted in our first natural growth prototype, which was later expanded into the system currently employed in the app.

The Natural Growth System

Essentially, our natural growth system is a self-organizing system that allows plants to construct themselves through repeated applications of relatively simple processes. By changing numerous (100+) plant variables in what we refer to as the plant genome, we can change the appearance and growth tendencies of the plant (the potential of the plant) and thus in effect create different plant species. Then, by making minute random changes in the initial state of the growth environment, we can all but guarantee that each instantiation of a given species is unique, every time. The following is an outline of how we built our system, but if you are interested in more theory on plant modeling, I can warmly recommend this database: http://algorithmicbotany.org/papers/

The Growth Environment

Two core elements characterize the growth environment: a cloud of attraction points (essentially points in 3D space that the plant wants to occupy) and a shadow voxel space. The voxel space defines shadow values for points in space and allows parts of the plant to cast shadows on other parts, locally inhibiting growth, as plant parts in shadow get less light and thus gather fewer resources. In broad terms, the attractor cloud exists to guide growth direction, whereas the shadow voxels are key to distributing growth resources and triggering growth.

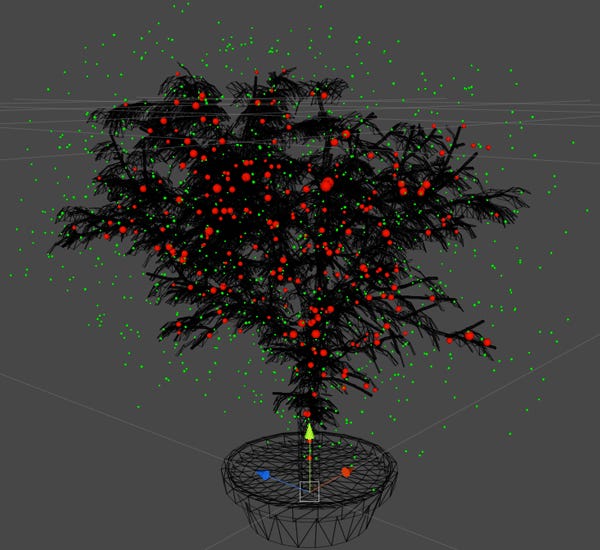

The Attractor Cloud

Across the 3D growth environment, a number of attraction points (approx. 7000 in the iPad version) are distributed with an even density, but each point has a minute random offset from its ideal position. This cloud of attractors defines the boundaries of the natural growth space, and by moulding the shape of the cloud we are able to heavily direct the growth direction of the plants. So much so, in fact, that restricting the cloud to cover a surface should essentially cause the plants to climb the surface, as one sees with ivy and other plants.

The attractor cloud

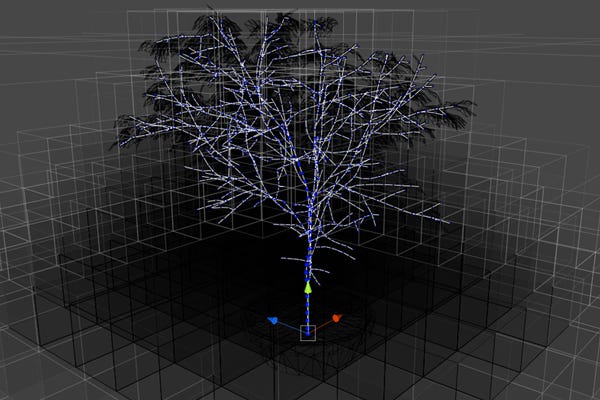
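To make the idea of an evenly spread but slightly jittered cloud more concrete, here is a minimal Python sketch. It is not the actual Unity implementation: the function name, the box-shaped bounds, and the `spacing` and `jitter` parameters are all assumptions made for illustration.

```python
import random

def make_attractor_cloud(bounds_min, bounds_max, spacing, jitter, seed=None):
    """Return (x, y, z) points on a regular grid, each nudged by a small random offset."""
    rng = random.Random(seed)

    def cell_centers(lo, hi):
        # Evenly spaced "ideal" positions along one axis.
        count = int((hi - lo) / spacing)
        return [lo + (i + 0.5) * spacing for i in range(count)]

    points = []
    for x in cell_centers(bounds_min[0], bounds_max[0]):
        for y in cell_centers(bounds_min[1], bounds_max[1]):
            for z in cell_centers(bounds_min[2], bounds_max[2]):
                # Assumed jitter model: a uniform offset per axis.
                points.append((
                    x + rng.uniform(-jitter, jitter),
                    y + rng.uniform(-jitter, jitter),
                    z + rng.uniform(-jitter, jitter),
                ))
    return points

# A 19 x 19 x 19 grid gives 6859 points, in the same ballpark as the iPad version.
cloud_a = make_attractor_cloud((0, 0, 0), (19, 19, 19), spacing=1.0, jitter=0.05, seed=1)
cloud_b = make_attractor_cloud((0, 0, 0), (19, 19, 19), spacing=1.0, jitter=0.05, seed=2)
```

Two different seeds give two clouds with the same overall shape but different point positions. Moulding the cloud would amount to keeping only the points that fall inside the desired shape.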

The Shadow Voxels

The shadow voxel space is used to imitate the fact that the plant (and potentially other objects in the environment) casts shadows, thus inhibiting growth in areas with little light and sometimes even triggering self-pruning, where plant parts wither and fall off. Basically, each part of the plant has a defined shadow value that it casts on the voxel it occupies, as well as on all other voxels in a pyramid shape below it, with a diminishing effect on each subsequent voxel. This calculation is carried out for each shadow-casting element, resulting in a 3D shadow map, which is employed when growth resources are distributed.

Voxel space - with voxels graded to represent shadow voxels
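The pyramid-shaped shadow propagation can be sketched roughly as follows. This is an illustrative Python sketch, not the code used in the app: the dictionary-based grid, the `depth` and `falloff` parameters, the geometric falloff, and the choice of y as the up axis are all assumptions.

```python
def cast_shadow(shadow_map, grid_size, cell, shadow_value, depth, falloff):
    """Add one caster's shadow to its own voxel and to a widening pyramid below it."""
    size_x, size_y, size_z = grid_size
    cx, cy, cz = cell  # assumed to lie inside the grid
    for level in range(depth + 1):
        y = cy - level  # y is "up" here; each level is one voxel lower
        if y < 0:
            break
        amount = shadow_value * falloff ** level  # weaker with every level
        for x in range(cx - level, cx + level + 1):
            for z in range(cz - level, cz + level + 1):
                if 0 <= x < size_x and 0 <= z < size_z:
                    shadow_map[(x, y, z)] = shadow_map.get((x, y, z), 0.0) + amount

def build_shadow_map(casters, grid_size, depth=3, falloff=0.5):
    """casters is a list of (cell, shadow_value) pairs; returns {cell: shadow}."""
    shadow_map = {}
    for cell, shadow_value in casters:
        cast_shadow(shadow_map, grid_size, cell, shadow_value, depth, falloff)
    return shadow_map

# Two casters stacked on top of each other in a 10 x 10 x 10 (1000 voxel) grid.
shadow_map = build_shadow_map([((5, 9, 5), 1.0), ((5, 8, 5), 1.0)], grid_size=(10, 10, 10))
```

Because every caster simply adds to the map, shadows from overlapping plant parts accumulate, which is what lets dense foliage starve the buds beneath it.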

The Growth Cycle

The system that handles growth revolves around two main questions: “what to grow?” and “where to grow?”. Therefore, we needed one system that defines which parts of the plant will grow and which will not (resource allocation) and another system that defines where the prioritized growth will actually extend into 3D space. Both these systems feature strong feedback loops, where the plant's current state, together with the environment, defines what will become the next actualization, and so on.

Now, a stem consists of nodes connected by internodes, the nodes being where leaves, flowers, cones and branches sprout. This is actually quite clear if you look at a plant; try checking out the nearest potted plant. Mimicking this behavior, we placed a number of buds at each node, distributing them according to known phyllotaxis patterns (patterns in which leaves are arranged on a plant stem), and to our great surprise we found that it took only four different phyllotaxis patterns to mimic most real-life plants. In our virtual plants, all new growth (be it leaves, flowers or stem) sprouts from these buds.
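As a rough illustration of placing buds around a node, the Python sketch below computes bud angles for a few textbook phyllotaxis patterns. Which four patterns the app actually uses is not stated here, so the table, its names, and its angles are assumptions chosen purely for the example.

```python
import math

# Hypothetical pattern table: (buds per node, rotation in degrees between successive nodes).
PATTERNS = {
    "distichous": (1, 180.0),  # one bud per node, alternating sides
    "spiral": (1, 137.5),      # one bud per node, roughly the golden angle
    "decussate": (2, 90.0),    # opposite pairs, each pair turned a quarter
    "whorled": (3, 60.0),      # three buds per node, offset between nodes
}

def bud_angles(pattern, node_index):
    """Angles (degrees around the stem) of the buds on the given node."""
    count, rotation = PATTERNS[pattern]
    base = (node_index * rotation) % 360.0
    return [(base + k * 360.0 / count) % 360.0 for k in range(count)]

def bud_direction(angle_deg):
    """Unit vector pointing away from a vertical stem (y up) at the given angle."""
    a = math.radians(angle_deg)
    return (math.cos(a), 0.0, math.sin(a))

print(bud_angles("decussate", 0), bud_angles("decussate", 1))
```

For a stem that is not vertical, the directions would additionally have to be rotated into the stem's local frame, which is left out here.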

A medium-sized tree (Red Jaywood)

Each plant starts off as a seed in the ground, which is essentially a single terminal bud, and over time this may grow into a complex 3D structure consisting of thousands of stem segments, buds, leaves and flowers. As an example, a medium-sized tree (like the one above) has been grown in an environment consisting of approximately 7000 attraction points within 1000 voxels and consists of roughly 1000 leaves/flowers, 2000 stem segments and 3000 buds.

Where to grow? - Resource allocation

When the plant has to figure out where to grow, it starts by sorting the buds into those able to grow and those not (aborted, already grown, hibernating etc.). It then registers the shadow value for each individual bud, based on the state of the shadow voxels. This information then feeds down through the plant's structure, which often consists of several generations of branches. The information flows together as branches converge towards the roots, resulting in growth potential sums at each branching point, until it finally adds up to a base growth potential at the roots.
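The downward pass can be pictured as a simple recursive sum over the branch structure. The following Python sketch is only an illustration: the `Bud` and `Branch` classes are hypothetical, and turning a shadow value into a potential as `1 - shadow` (clamped at zero) is an assumption, since the exact mapping is not described here.

```python
from dataclasses import dataclass, field

@dataclass
class Bud:
    cell: tuple          # voxel the bud sits in
    active: bool = True  # False for aborted, already grown or hibernating buds

@dataclass
class Branch:
    buds: list = field(default_factory=list)
    children: list = field(default_factory=list)
    potential: float = 0.0

def bud_potential(bud, shadow_map):
    """Assumed mapping: full light gives 1.0, heavier shadow gives less, never below 0."""
    if not bud.active:
        return 0.0
    return max(0.0, 1.0 - shadow_map.get(bud.cell, 0.0))

def accumulate_potential(branch, shadow_map):
    """Sum bud potentials towards the root, storing the subtotal on every branch."""
    total = sum(bud_potential(b, shadow_map) for b in branch.buds)
    total += sum(accumulate_potential(c, shadow_map) for c in branch.children)
    branch.potential = total
    return total

shadow = {(0, 5, 0): 0.25, (1, 4, 0): 0.5, (2, 3, 0): 2.0}
twig = Branch(buds=[Bud((1, 4, 0)), Bud((2, 3, 0))])
dormant = Branch(buds=[Bud((9, 9, 9), active=False)])
trunk = Branch(buds=[Bud((0, 5, 0))], children=[twig, dormant])
base_potential = accumulate_potential(trunk, shadow)
```

After the call, every branch holds the potential of everything growing from it, and `base_potential` is the sum that arrives at the roots.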

This base growth potential defines the base growth resources, which are then redistributed back through the branched structure of the plant. However, to achieve the greatest possible plant diversity, rather than distributing the resources 1:1 in proportion to growth potential, the resource distribution is modified by numerous genome-based parameters that shift the growth in various ways, such as:

Apical control parameters, which shift the growth priority between lateral and terminal growth (Relative to the plant, not the environment)

Gravimorphism parameters, which shift the growth priority between horizontal and vertical growth (Relative to the environment, not the plant)

Proleptic sleep parameters, which impose a temporal growth latency on new buds.

Once these parameters have been applied to modify the resource flow back towards the buds, each bud receives an amount of growth resource. If this amount exceeds a minimum threshold, the bud is set to be able to grow. What, where and whether it will grow is decided in the next step.
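A stripped-down version of this redistribution might look like the Python sketch below. It only models a single apical control parameter. Gravimorphism and proleptic sleep are left out, and the `Segment` class, the weighting formula, and the parameter names are assumptions for illustration rather than the genome's real variables.

```python
from dataclasses import dataclass, field

@dataclass
class Segment:
    potential: float           # growth potential summed on the way down
    is_terminal: bool = False  # True if this child continues its parent's axis
    children: list = field(default_factory=list)
    resource: float = 0.0
    can_grow: bool = False

def distribute(node, resource, apical_control, threshold):
    """Push resources from the root outwards; leaves of the tree stand in for buds.

    apical_control = 0.5 splits purely by potential; higher values favour
    terminal growth, lower values favour lateral growth (assumed weighting).
    """
    node.resource = resource
    if not node.children:
        node.can_grow = resource > threshold
        return
    weights = [
        c.potential * (apical_control if c.is_terminal else 1.0 - apical_control)
        for c in node.children
    ]
    total = sum(weights)
    for child, weight in zip(node.children, weights):
        share = resource * weight / total if total > 0 else 0.0
        distribute(child, share, apical_control, threshold)

leader = Segment(potential=1.0, is_terminal=True)
side = Segment(potential=1.0)
root = Segment(potential=2.0, children=[leader, side])
distribute(root, resource=1.0, apical_control=0.75, threshold=0.3)
```

With equal potentials and an apical control of 0.75, the leader receives three quarters of the resources and passes the threshold, while the side bud stays below it and does not grow this cycle.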

Where to grow from there? - Producing new growth

Once a bud is set to grow, the natural growth system decides whether it will grow into a flower, leaf or new stem segment. If the choice lands on a flower or leaf, it's easy: it is simply placed according to the parameters governing leaf and flower placement at the relevant bud. It is when the choice lands on creating stem segments that the fun begins.

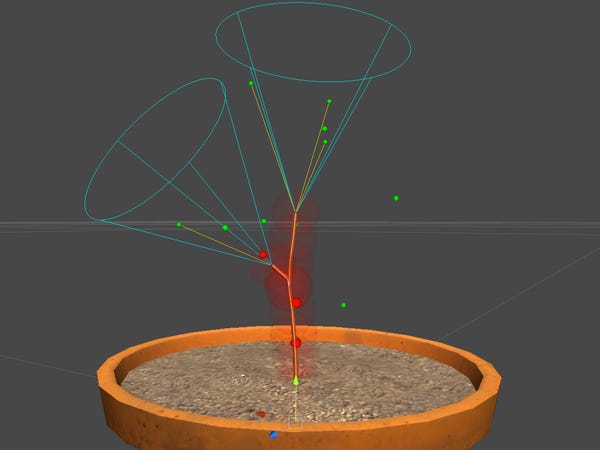

Figuring out where to grow

When growing a stem segment, the bud checks the surrounding environment to see if there is room to grow, doing so within a cone defined by range and angle variables set by the plant genome. The bud will grow if and only if there are one or more active attraction points within the cone. If the search is positive, the system produces a growth vector, normalized between all the “bud-to-active-attraction-point” vectors inside the perception cone. This initial growth vector is then modified by several growth parameters set for the specific plant species in the genome, such as tropism parameters, which promote or hinder branches sagging or striving upwards.
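The cone search and the resulting growth vector can be sketched in Python as follows. This is one plausible reading rather than the app's actual code: summing unit vectors to the attraction points and normalizing the result, treating the angle as a half-angle, and modelling tropism as a simple additive vector are all assumptions.

```python
import math

def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def _add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def _normalize(v):
    length = math.sqrt(_dot(v, v))
    return None if length == 0.0 else (v[0] / length, v[1] / length, v[2] / length)

def growth_vector(bud_pos, bud_dir, active_attractors, cone_range,
                  cone_half_angle_deg, tropism=(0.0, 0.0, 0.0)):
    """Unit growth direction, or None if no active attraction point is in the cone.

    bud_dir must be a non-zero vector giving the direction the bud points in.
    """
    axis = _normalize(bud_dir)
    cos_limit = math.cos(math.radians(cone_half_angle_deg))
    total = (0.0, 0.0, 0.0)
    found = False
    for point in active_attractors:
        to_point = _sub(point, bud_pos)
        distance = math.sqrt(_dot(to_point, to_point))
        if distance == 0.0 or distance > cone_range:
            continue  # outside the perception range
        unit = _normalize(to_point)
        if _dot(unit, axis) >= cos_limit:  # inside the cone's opening angle
            total = _add(total, unit)
            found = True
    if not found:
        return None
    # Assumed tropism model: add a bias vector, then renormalize.
    return _normalize(_add(total, tropism))

up = growth_vector((0, 0, 0), (0, 1, 0), [(1, 1, 0), (-1, 1, 0)], 2.0, 60.0)
sag = growth_vector((0, 0, 0), (1, 0, 0), [(1, 0, 0)], 2.0, 60.0, tropism=(0, -0.5, 0))
```

In the first call the two attraction points pull equally to either side, so the bud grows straight up between them. In the second, a downward tropism makes an otherwise horizontal branch sag.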

Once the final growth vector is established, the branch grows one stem segment before producing a new node and with it new buds. This new node has a kill sphere (its radius defined by the plant genome) within which it deactivates all attraction points, effectively signifying that it has taken up this space. Perhaps the stem has enough resources to keep growing (or will be given more resources during the next growth phase), which would result in a “rinse and repeat” to create more leaves, flowers or stems, though now in an environment with slightly less room and slightly altered shadow values due to the new growth.
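The kill sphere itself is a small operation. A minimal Python sketch, assuming the attraction points are kept in a list and the active ones are tracked as a set of indices (both hypothetical choices for this example):

```python
import math

def apply_kill_sphere(node_pos, attractors, active, kill_radius):
    """Deactivate active attraction points within kill_radius of node_pos.

    attractors is a list of (x, y, z) points and active is a set of indices
    into it. The set is modified in place; the number of points removed is returned.
    """
    claimed = {i for i in active if math.dist(attractors[i], node_pos) <= kill_radius}
    active -= claimed  # in-place update, so the caller's set shrinks too
    return len(claimed)

attractors = [(0.0, 1.0, 0.0), (0.3, 1.2, 0.0), (0.0, 3.0, 0.0)]
active = {0, 1, 2}
removed = apply_kill_sphere((0.0, 1.0, 0.0), attractors, active, kill_radius=0.5)
```

After the call only the far-away point remains active, so later buds searching this area will find less room to grow into.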

So, how much variation can this system create?

First off, when a player plants a new plant, he or she starts out by selecting a seed to define which species the plant belongs to. There are currently 27 species in “Let's Grow!”, but we could create an astronomically large number of variant species. Each species is defined by a plant genome, which we built in our genome editor tool. These genomes are built from more than a hundred variables, which define everything from the color of the leaf vein patterns to the plant's high-level branching tendencies. Every part of the plant is determined by these variables, and everything apart from the bark texture is created procedurally based on this information.

Once a seed has been planted, the initial state of the system is set. The 100+ growth variables of the plant genome have been instantiated, together with a new instance of the 3D growth environment where the attraction points have been distributed. Given the virtually infinite number of ways to distribute 7000 attraction points in a defined 3D space, it is extremely unlikely that any two plants will ever have exactly the same initial state of genome and environment. Now, due to the way chaotic systems behave (check out the butterfly effect - http://en.wikipedia.org/wiki/Butterfly_effect), these variations, no matter how minute, will result in noticeably different plant models once they have been grown.

In short, the system offers a potentially unlimited variation of plant species and (in practice) guarantees that no two plants will ever be identical, while all plants of a given species will always share the same macro-level traits, making them clearly recognizable as belonging to the same species.

Three different Natural Growths of the Autumn Glory species, each grown 15 cycles

To be continued...

In “Part 2 – the advantages of Chaos”, I will try to give some examples of how this impacted our work as developers, as well as the user experience. The aim is to answer the question “Can chaos empower the user-experience?”, before serving up a few thoughts on where systems like this could take us.

I hope you will return for part two...
