
Featured Blog | This community-written post highlights the best of what the game industry has to offer.

Some thoughts on how game design practice has been shaped by business, technology and culture.

Here I share some thoughts on how game design practice has been shaped by business, technology and culture. First published in the book Critical Hits: An Indie Gaming Anthology, edited by Zoe Jellicoe, foreword by Cara Ellison.

In 1884, an Englishman named George Sturt inherited a two-hundred-year-old wheelwright’s shop from his father. A wheelwright was an artisan who made wheels for horse-drawn vehicles such as carts and carriages. Sturt had been a schoolteacher, and was entirely new to the business of making wheels. As a man possessed of both a wheelwright’s shop and the enquiring mind of a scholar, Sturt set out to learn not only how wheels were made, but also why they were made that way. Finding people to show him the wheel designs and how they were constructed was not difficult. But he could not find anyone to explain why, for example, cart wheels had a strange, elaborate dish shape, like a saucer. Why were they not flat? At first glance the strange shape seemed to have some clear disadvantages. Not only did it make the wheels more time-consuming to build; they also had to be mounted on a cart so that they jutted out from the side at a rather odd angle. And yet they did their job remarkably well.

Sturt developed his own theories to explain the strange design, which he recorded in his 1923 book The Wheelwright’s Shop, and in the decades since then designers and engineers have offered their own. While Sturt was destined never to truly understand the secret logic encoded in this wheel design, he quite happily continued using it to make wheels for a number of years. In doing so he was following the tradition of generations of wheelwrights before him, whose knowledge and skill were accompanied by that other enduring tradition of the artisan: an almost complete lack of theoretical understanding.

We no longer have wheelwrights and we are no longer making wheels in shapes we don’t understand. This is because design practice (the way designers design) in any discipline — from the creation of wheels, buildings and furniture through to film, music and literature — develops over time. It reaches milestones; it passes through phases. Until around a decade ago videogame design looked as if it was about to begin the evolutionary shift that other design disciplines had made before it. But this did not happen. A confluence of major changes to the world of videogames — technological, industrial and cultural — took place, setting game design on a very different course. To understand what happened, we need to turn the clock back to the late 1990s and early-to-mid 2000s, before these major changes had occurred.

Let’s imagine a console game (for PlayStation or Xbox, for example) being made in an averagely successful game development studio.

Game design would usually kick off with a pitch for a game idea, taking the form of a document, some concept art and perhaps even a video mock-up or prototype. This pitch (and some business due diligence) was usually enough for a publisher to decide whether the game was worth financing. Once the project was green-lit, the first phase of development, ‘pre-production’, would begin, and a small team of designers would produce a game design document several hundred pages long. This document served as a blueprint for production and project planning: it broke the game down into elements that could be costed and scheduled, and it communicated every element of the design to the publisher so they knew what they were getting. A prototype, or perhaps more than one, was then produced.

Based on this prototype and the now hefty design document, the game would go into development for the next year and a half or more, with a team ranging in size from several game developers to several dozen, or even hundreds.

Many months or years into this process, the project reached the milestone known as ‘alpha’, meaning that the game was considered ‘feature complete’. All major functionality would by then have been implemented, and the game was playable from beginning to end. It was often not until this point that designers could playtest and evaluate their game design. Frequently, the gameplay would require some major changes.

Design changes mid-project could send development into disarray. Project managers were naturally wary of any changes that would mean throwing away months of production work. Besides, by this point the project might already be running late, with some features already being cut from the game, to the despair of designers. Programmers were now working nights and weekends, for months on end. Occasionally, after months or even years of work, the now fully playable game might reveal itself to be so irretrievably bad that the publisher would cancel the project altogether and cut its losses.

This process that I have just described — the process being used for most game development projects at the turn of the 21st century — was beginning to stagger under its own weight. Ploughing significant resources into a game months before you could be fully confident that the game design was not fundamentally flawed was risky. As teams grew and development budgets rose from millions to tens of millions, publishers became wary of backing projects that took creative risks. It was safest to make games with the kind of mechanics that we already knew worked — because we’d already played them in other games. Innovation, many observed, was suffering as a result.

Things going awry in Game Dev Story (2010), a game development studio simulation game.

At the core of the problem was a conflict of interest between the increasingly demanding needs of production and the needs of design — it was a broken relationship. But game designers were not the first designers to find themselves needing to rethink the relationship between designing and making.

In comparing traditional building design to modern architecture in his 1964 book Notes on the Synthesis of Form, Christopher Alexander makes a distinction between two kinds of design cultures: ‘unselfconscious design’ and ‘self-conscious design’. ‘Unselfconscious design’ (or ‘craft-based’ design) concerns the use of traditional building methods, in which the designer works directly on the form (so, if you were a wheelwright, the wheel) unselfconsciously, through a complex two-directional interaction between the context and the form, in the world itself. The design rules and solutions are largely unwritten, and are learnt informally. The same form is made over and over again with no need to question why; designers need only learn the patterns. They know that a specific design element is good, but they do not need to understand why it is good.

Igloos are a good example of unselfconscious design.

In unselfconscious design, design traditions and conventions stand in place of design theory. With no understanding of underlying design principles, modifying or removing a design element can be risky. This means that patterns and rules of design evolve very slowly. And while rules and conventions can develop gradually over time to attain a high level of design sophistication, as they did with the cart wheel, this is not accompanied by a deepening understanding of the design principles that govern those patterns and rules.

Modern designers of wheels are not like their unselfconscious predecessors, the wheelwrights. They practise what Alexander calls ‘self-conscious design’. In this modern design culture, design thinking is freed from its reliance on making. Thanks to their design thinking methods and tools, an engineer or an architect does not need to build the bridge or house they are designing in order to test whether it will fall down. In architecture and design, this not only opened up possibilities for bold creativity and innovation; it also facilitated the rapid adaptation of designs to changing social, technological and environmental requirements and fashions. Self-conscious design runs right through the arts as well. Consider a composer’s ability to write pages of orchestral music at a desk, without needing to literally hear the music they are writing.

In 2004, game designer Ben Cousins used Alexander’s distinction between traditional and modern design practices to criticise the videogame design and development process of the time. In a short blog post, Cousins suggested that game developers are still operating under an unselfconscious system of design. He described a game design culture that was rife with anti-intellectualism, where the ‘elders of game development’ were intolerant of any deviation from traditional process, and where ‘academic, scientific and analytical techniques are not allowed’, delaying ‘the search for true knowledge’. He argued that the major difference between designers in other media and those in games was the fact that we had not yet progressed to self-conscious design.

Cousins was not alone in this observation. It had not gone unnoticed that, despite the rapidly increasing technical sophistication and production values of videogames being produced, game design itself remained relatively under-developed. Back in 1999, designer Doug Church had published an influential piece in the industry’s trade magazine Game Developer, calling for the game design community to develop what he called ‘formal, abstract design tools’. Church warned that our lack of formal design understanding would hinder our ability to pass down and build upon current design knowledge, and that our lack of even a common design vocabulary was ‘the primary inhibitor of design evolution’.

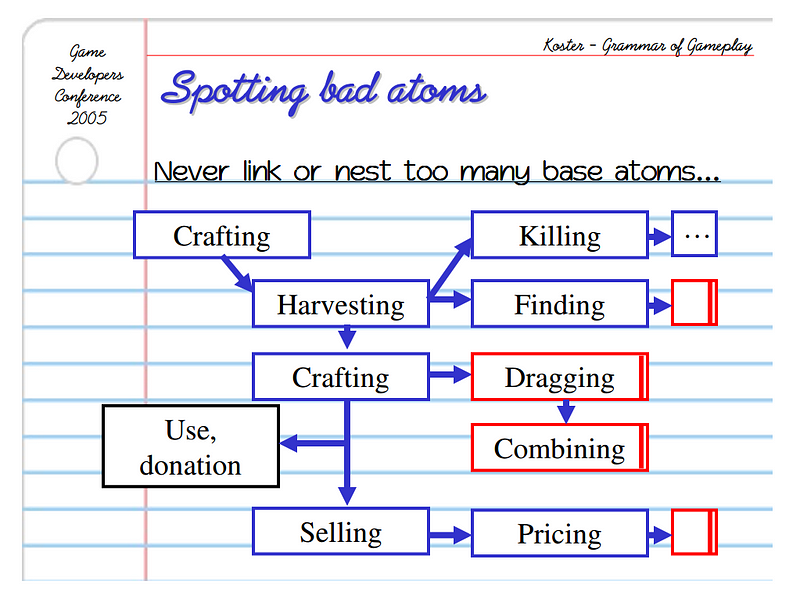

In the years that followed, others joined the call. Designer Raph Koster highlighted the imprecision of natural language as a tool for designing gameplay, and proposed we develop a graphical notation system for game design. Designer Dan Cook went so far as to call past game design achievements ‘accidental successes’. Cook’s observation that ‘we currently build games through habit, guesswork and slavish devotion to pre-existing form’ is an apposite description of how unselfconscious designers work.

As Alexander explained it, an unselfconscious designer has no choice but to think while building — they design by making. But in self-conscious design, a designer has ways to solve their design problems without relying on making, or having to think through all the moving parts of a design problem just in their head.

To some extent, writing down ideas to think them through can help (such as in a game design document), but game designers were finding this process woefully inadequate. What self-conscious designers had that we did not was a means of ‘design drawing’. Design drawing does not literally mean a drawing; it could be a sketch or model of a building, musical notation or indeed any kind of material that gives a useful representation of the current state of your design thinking (your ‘design situation’). Design drawing is not primarily for communicating the design to other people; it is part of the design thinking process. You, the designer, can engage in a private design ‘conversation’ between you and these ‘materials of design’ that you are creating and modifying.

Design drawing is a designer’s superpower. In his book How Designers Think, Bryan Lawson describes how the advent of design drawing liberated designers’ creative imagination ‘in a revolutionary way’. It afforded them a greater perceptual span, enabling them to make more fundamental changes and innovations within one design than was possible in traditional design-by-making style practice.

Some game designers and academic researchers set out to see whether they could answer Church’s call for design tools. They proposed semi-formal, sometimes graphical methods of modelling and describing gameplay. Some designers were trying to devise a kind of ‘grammar’ of gameplay that could help us break it down into basic units (‘verbs’, ‘ludemes’, ‘atoms’, ‘choice molecules’ and ‘skill atoms’). Others were attempting to abstract and formalise cross-genre design concepts, giving design talks with names like Orthogonal Unit Design. Project Horseshoe, an invite-only annual event ‘dedicated to solving current problems in game design’ that gathers some of the community’s best-known figures, set itself the task of figuring some of this stuff out.

A slide from Raph Koster’s Game Developers Conference talk from 2005, “A Grammar of Gameplay”

Did these early attempts build momentum and grow into an industry-wide shift towards self-conscious design in games?

No, they did not. Game design today is not a self-conscious practice in which designers use design drawing tools to support their design thinking as an alternative to design-by-making. No language of game design, nor anything that could be described as ‘formal, abstract design tools’, has been adopted into mainstream game design practice. In fact, in game design today the make-and-test approach of unselfconscious design has become even further entrenched. But without adopting any new way of supporting design thinking apart from making, how did our increasingly unwieldy and expensive process of design guesswork not eventually collapse under its own weight?

This is because, over the last ten years, some major changes have occurred that have, at least temporarily, delayed or masked the need for a shift towards self-conscious design. These changes have allowed us to mitigate some of the inefficiencies and slow evolution of unselfconscious design by driving a huge increase in the speed, volume and sophistication of both making and testing. If we were wheelwrights, this would be akin to having a phenomenal capacity to build wheels and test incremental adjustments to them, on a massive scale and at high velocity. In other words, we now engage in a kind of ‘brute-force’ unselfconscious design practice, in which our design evolution and innovation have come to rely less on any advance in our theoretical understanding and sophistication as designers, and more on our sophistication as makers.

The first major change to the way we make games came from outside the game industry — via the software industry.

Let's once more review the state of game development as it was in the early years of this century. Many people felt that the way we made games was fundamentally broken. Game development projects were struggling, producing job instability, scheduling blowouts, a 95% marketplace failure rate and a work culture of long hours so notorious it was making headlines in the mainstream press.

The fact that game industry project managers were usually young and lacking in formal training was undoubtedly a factor. But even the best software development project managers might have struggled with the process being used in game development at the time. This stage-based process that I described earlier took its cue from conventional software development processes that involved comprehensive planning at the beginning stage of the project — the ‘Waterfall’ model. Team members were required to understand and predict how long it would take them to solve a design or engineering problem before they had started working on it.

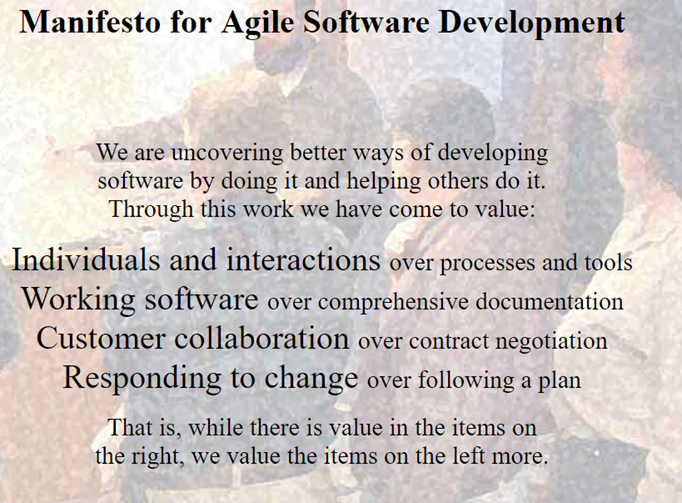

Critics of this process within the wider software industry were beginning to call it absurd and impossible, and the cause of scheduling blowouts and other project management issues. Central to the range of new ‘agile’ software development frameworks that emerged in response was the idea of replacing elaborate planning and extensive documentation with the rapid, iterative creation of ‘working software’, which could be tested as often as possible, modified as needed for a new version, then tested again. This could be distilled into ‘iterate and test’: make it, test it, change it, repeat.

It is hardly surprising, therefore, that when agile development became fashionable within the software industry, game developers — and designers — were enthused about this new iterative approach. Not only did it offer an escape route out of our development cycle of pain, it put playable versions of the game into the hands of designers right the way through the production cycle — a far cry from having to wait months for the alpha. Some game designers saw this as the salvation they had been waiting for: finally, hands-on creative control over a process they had seen gradually slipping away from them.

The Agile Manifesto (2001). Not only did it teach us to embrace iterative development, it also served as an alarming reminder of why you should never leave a programmer alone in a room with a Photoshop filter.

But agile methods could only partially address the increasing cost and time spent on producing something playable. In the early years of this century, making even a modest game prototype was still very expensive. That would soon change. Over the next decade, changes in game production, labour, distribution and the digital games market transformed our ability to iteratively make and test.

Take game engines, for example. In the early 2000s they were costly whether your studio developed one itself or licensed one from another company. The widely used game engine RenderWare cost tens of thousands of dollars to use per title. Even the Torque game engine, the low-fi ‘budget’ option aimed at independent developers, would set you back $1,500. And if you wanted to include video in your cutscenes? Sound? Physics? Developers also needed to pay thousands in licensing fees for those technologies. A level or world-building tool? Build your own.

RenderWare was the engine you licensed if you didn’t have the resources to build your own game engine. That is, until Electronic Arts bought the studio that made it and took it off the market.

Compare that to today: two of the leading professional game engines — Unity 3D and Unreal Engine — are more or less free for anyone to use. No longer are they bare-bones, either; they do a lot of the heavy-lifting that used to take a large amount of time and skill from programmers. Modern game engines can allow a complete beginner to build (albeit within very tight creative constraints) a playable experience within a few hours.

Thanks to these advances in production tools, the ability for designers to better understand a game design idea via the act of building and playing it has become significantly more accessible.

Other changes to the industry have accelerated the making and testing cycle. In particular, the emergence of digital distribution has meant that developers can now make much smaller games in a much wider variety of genres, and get them to players faster.

Until recently, videogames were more or less exclusively sold in boxes in retail stores, where shelf space was sewn up by corporate distribution deals. A notable exception to this emerged in the late 90s and early 2000s: the sale of so-called ‘downloadable games’ by online game publisher-distributors like Big Fish. These games, which included titles like the original Diner Dash, were targeted at ‘casual gamers’ (then somewhat sneeringly described as the ‘40-year-old housewife market’). Meanwhile, Flash games were attracting a smaller, younger community of enthusiasts via browser game portals such as Kongregate.

But as the Internet got faster and bandwidth got cheaper, selling downloadable games online was becoming a realistic option for the much bigger games of the ‘core’ market. Valve’s digital distribution platform, Steam, opened up to third-party PC games in 2005, and the launch of Apple’s iPhone App Store in 2008 saw online distribution of games go mainstream. Microsoft, Sony and even Nintendo started to curate digital distribution sub-platforms on their game consoles. PC games, which many industry pundits back in the day had been telling us were a dying market, were now suddenly much easier to buy and install, and were given a second wind.

A key characteristic of these digital distribution platforms was that not only did they break the stranglehold of the big publishers, distributors and retail stores, thereby clearing a path-to-market for independent developers, they were also ostensibly ‘open’ (though still regulated) platforms that anyone could make and sell games on. These changes were fundamental to enabling the phenomenal rise of indie games.

Until around a decade ago, independently published games had what were perceived to be all the worst possible traits: small scale, low-fi graphics, retro or niche genres, and availability generally only on PC. To the industry, this made them virtually unmarketable, except to a tiny audience of nerdy die-hards. Indie games were such a non-starter that an article with the title ‘Why There Are No Indie Video Games’ appeared in Slate magazine in 2006. In 2005, Greg Costikyan, a leading figure in the movement to establish an independent games industry, spoke of the need to cultivate an ‘indie’ aesthetic similar to that of film and music, one that made the audience feel as if they were part of a hip, discerning fan subculture. He was right, and it happened just as he said it would. Over the last ten years, each one of those ‘bad’ traits (small, 2D, low-fi, retro and so forth) has been successfully rebranded into a hip, indie aesthetic. Digital distribution played a major role in this.

In 2006 you could write headlines like this.

Together, all these changes to production and distribution, to the character of games and their audiences, have contributed to a massive increase in the volume and frequency of games being designed, produced, and brought to market. For example, 2014 saw an average of 500 game launches per day on the iTunes App Store; in 2015 Steam averaged eight daily releases.

Not only did the Internet help significantly widen the volume and variety of games we make, it also helped super-charge the other part of the make-and-test design paradigm: testing. While user testing has long been used in game development, the Internet and the technologies it has spawned have enabled testing to be applied to the design process in new ways. Testing has been extended and formalised into a powerful design tool, as part of the iterate-and-test formula now generally accepted as best practice in game design.

This is nowhere more apparent than in techniques used in ‘data-driven design’ — design based on analysing the behaviour of players using data they generate as they play.

Data-driven design is native to ‘freemium’ games (games like Farmville, Candy Crush, Clash of Clans and League of Legends) that use a ‘games-as-a-service’ model. The design of these games is not static, as boxed games used to be. The game is designed as a continuously evolving entertainment service that gathers live data from its users, driven by a design process that continues indefinitely after the game has been launched. Because players pay via in-app purchases rather than buying the game up-front, the designer’s task is to maximise both the money (monetisation) and time (retention) that players spend in the game. To help us finely tune these design decisions our industry has borrowed marketing optimisation techniques from online industries. One of these is A/B testing, in which different versions of a design change are run simultaneously, each shown to a separate group of players, to see which one results in the best retention and monetisation.

This data-driven approach to game design is no longer confined to a small section of the industry. As online play becomes the norm, and as free-to-play monetisation gains wider traction across more gaming markets, genres and platforms, working with player metrics data is being increasingly integrated into our game design process.

Another trend that integrates live user testing into the heart of our process is the practice of ‘open alpha’, which is currently popular in indie game development. Players pay to access an alpha-stage (playable, but not finished) build of a game, which in turn helps developers fund the game’s completion. An open alpha also acts as a live testing platform, similar to games-as-a-service. A large part of the design success of Minecraft, for example, can be attributed to the adjustments to its design made based on feedback from the community during its lengthy open-alpha period.

Making testing a more central part of our design process has bolstered our craft-based approach. It has formalised and amplified (and, in some sectors of the game industry, mandated) an unselfconscious design method of tiny iterative adjustments.

Does this new testing regime mean that making games is now less risky? Have the new platforms, new markets, digital distribution and the emergence of indie gaming loosened the market stranglehold that publishers and distributors once held? Have we escaped the curse of being a hit-driven industry? No — not really. At least, not in the way we might have hoped.

Mobile game developers today find themselves frustrated by a winner-takes-all market, in which big companies maintain near-total dominance of App Store charts. Meanwhile, Steam usage stats reveal that players of new games make up only a tiny minority of the PC gaming audience. The vast majority of games released do not break even.

Making money from games is as creatively and commercially risky a business as it ever was. The difference between then and now is in who bears the costs of these risks. Increasingly, it is developers themselves.

Those publishers and investors who would previously have stumped up millions in production funding on the strength of a pitch and the quality of a development team are increasingly demanding that some form of the game already be produced and proven in the market — for example, interest generated in the game via a crowdfunding campaign, player metrics from a soft launch or release on a single platform — before they will agree to publish or invest. This is common in mobile game publishing, and there are signs of established AAA publishers following this trend. There is an increasing unwillingness to front the money and take a risk on an unproven game.

What is giving publishers this kind of leverage? And, in a risky market, what is stoking the industry’s giant furnace of game production, with its enormous increase in the volume and pace of output, most of it doomed never to break even?

Excerpt from Chris Crawford’s essay “The Education of a Game Designer” (2003)

To answer these questions we first need to understand something unusual about the demographic character of the game industry. Over the last decade or two, our industry has undergone some major changes. One thing that has not really changed, though, is how young (and, as many will observe, white, male and middle-class) developers are. People who look at the young faces in our industry might assume we are a ‘young’ design culture in a historical sense. But that is no longer actually true; we are kept artificially young.

Our game developer ancestors did not disappear due to death or retirement. They still walk among us; they just no longer work alongside us. It is almost as if we are an industry haunted by the ghosts of living former game developers. What if these generations had remained, instead of leaving the industry before reaching middle age? If they had, game development might not be such a strikingly young scene. As a group, we might have more closely resembled our greying technical and creative counterparts in other entertainment industries like film and television, who also face ageism but not to such a brutal extent.

Game developers receiving their career achievement awards. (Alternatively, the 1976 film Logan’s Run)

In 2004 game development careers lasted an average of five years or less. A major reason for this was that game developers burn out: salaried employees were expected to work excessively long hours for relatively low pay, and were frequently laid off at the end of projects. The industry today still shows few signs of offering long, sustainable careers, in which its creatives can expect to spend decades deepening and maturing their design practice. We are expected to reach our full creative potential early and fast. While this makes us relatively cheap and disposable, the downside for the industry is a problem of supply. As the fruit flies of the screen-based arts, our numbers need to be regularly replenished in order to enable and maintain the industry’s heightened activity. How has this been achieved?

Firstly, it has been achieved by making game development a highly visible, highly desirable activity. A career in game development, previously a niche activity for nerds and computer science drop-outs, has undergone a remarkable image change. As Hasbro will tell you, based on a 2015 survey they did to update the careers featured in their popular family board game The Game of Life, the number-one career preferred by kids is ‘videogame designer’.

While there are status benefits to your profession now being seen as every kid’s dream, there is also a downside. Dreams are goals you are supposed to aspire to while you are young, before you finally give them up to get a ‘real’ job. When the world perceives your line of work as not so much about making a living as living a dream, your once-reasonable expectations of a sustainable career can start to seem unreasonable — even entitled.

There is another way in which the industry benefits from this wave of interest in game design careers. The game design dream has helped ensure the rise of a new industry that enables and serves the expanded productive capacity of the game industry. Before entering the industry proper, the dream’s young adherents are chaperoned into a feeder industry with enormous productive capacity of its own: the games education sector.

Degrees in game development are relatively new. Until a decade or so ago, game-specific qualifications were only offered by a rare few universities. The first decade of the century saw an explosion of game development courses offering graduates a shot at a (likely as not, very short) career in game development.

The emergence of a games education sector is great for the game industry. It has created a large pool of reserve skilled labour, and allowed the industry to outsource its highly vocational training and associated costs to institutions, students and their families. There is also a more subtle and pervasive benefit that the game industry receives from the games education sector: it supplies the game industry — in the ways it feeds and maintains the indie games scene, for instance — with cheap and free cognitive labour which injects new ideas and innovations into the wider field of game design.

Game developers who are young, cheap and free play well into our present-day context of economic and generational inequality. As members of a new sub-class of workers some have named the ‘creative precariat’, creative professionals are increasingly being pressured into re-imagining their productive labour as something that is no longer necessarily exchanged for pay directly. Instead, we are to build social capital, personal brands and professional profiles that we hope will lead, indirectly and even somewhat mystically, to payment — like a kind of economic karma.

“Game developer” was Barbie’s official Career of the Year for 2016.

What does it mean for an industry to find itself with new and virtually inexhaustible sources of cheap, free labour? It means that herein we have our final, key element powering the ‘brute-force’ of our brute-force unselfconscious design practice. It is a practice in which evolution and innovation are being driven not by intellectual or technological advances in design itself, but by the sheer volume and speed of designs produced and tested, within a design culture increasingly characterised by mass-participation game jams and intensive player-metrics harvesting. This brute-force approach is allowing us, as a design culture, to mitigate the inefficiencies of the craft-based design process: the inefficiencies that hold back the pace of evolution in traditional unselfconscious design cultures.

Imagine the industry is a computer. Our design ‘algorithm’ may be inefficient and old-fashioned, but why waste time optimising it now that we have a dramatically faster machine to run it on?

Is this the inevitable present and future of game design? Or might we take it down a different path?

Design by making can be a wonderfully satisfying process. But it should not be the only process available to us. While it may currently suit the industry for game designers to remain in a state of stunted development, we should be demanding more of ourselves — and more from our industry.

It is time we returned to this unanswered question of formal, abstract design tools for game design. Until we have the tools to design with our minds and not just with our hands, we are limiting ourselves creatively. In games, these limits include having our creative process held hostage by the oftentimes alienating and frantic churn of the production and testing cycle.

Having been exposed to data-driven design, which some designers have experienced as having a negative impact on their process and their creative agency, our natural instinct may be to feel wary of or resistant to any and all formal approaches to design thinking. But the answer to tools we do not like is not no tools; it is better tools. We can demand research and development into design support technology: not more tools for prototyping, production or metrics, but tools that support design thinking.

With conceptual and computer-aided design tools, we could extend our design thinking beyond the constraints of current practice. We could model difficult design problems, and take more creative risks. A tiny minority are still attempting this, albeit on the fringes of industry. The tool Machinations is a notable example of this work, as are recent experiments in ‘mixed-initiative design’ by a handful of artificial intelligence researchers within academia. While last century’s call for design tools may have long been forgotten by the mainstream of game design, some of us still want to believe.
