How I improved my efficiency as an Engineer working in VR by leaving the headset on the floor more often.

My job at RareFaction Interactive is to serve my teammates' Engineering needs by writing solid, maintainable code that satisfies whatever feature requirements we're looking to tackle.

For several years, the lion's share of my work has been in VR. I spend a decent chunk of my workdays implementing interaction designs, gameplay features, and polishing "narrative pacing" (that's a fancy term for level scripting / triggering events - you can use that one royalty free). Needless to say, I've taken off and put on the Vive / Rift / GearVR / etc. more times than I'd like to count. Last year, I resigned myself to wearing my Vive Pre as a hatvisor of sorts, in anticipation of my next round of functional testing for some feature I was working on.

Don't I look happy?

The fact of the matter is that debugging in VR for an Engineer can be a particularly unenviable, frustrating, and inefficient task. In this blog post, I'll offer a solution that works for me in sanity testing interaction design during development of our Unity-based projects.

First, and at the risk of being too verbose (a quality I've been endowed with since birth; sorry), let's enumerate the 9-step process of standard feature development in VR. Hyperbolic anecdotes have been included, free of charge:

1. Write Code which compiles and instills some level of faith in you that it may work.

2. Perform the headset donning ritual. Coerce your glasses into fitting inside the headset in such a way that they are only slightly crooked.

3. Tilt your head way up and peek at your screen from the small gap of daylight which exists just below your nose. Find your mouse cursor, hover to the play button in Unity and click it. If you're savvy enough to press Ctrl+P while peeking through your headset, do that instead. Your test scene is now running. Celebrate by exclaiming some minor profanity.

4. As you attempt to pick up your Vive/Touch Controllers, make reasonable attempts to avoid hand contusions from the edge of your desk while avoiding knocking over anything containing liquid all over your keyboard. Once you actually have the devices in hand, interact with some object or actor in your test scene. You may hit a breakpoint in your code; this will freeze your in-headset view, causing a near-instant sensation of virtego and/or nausea.

5. Remove your headset in order to view your code and the breakpoint on which it is focused. Your glasses have undoubtedly fallen on the floor as you removed your headset; pick them up without rolling over them with your chair.

6. Step through / over / into your code, using the immediate window and call stack as needed to satisfy your inquisitiveness. Once certain that you've interacted with the intended object and that your inputs into your interaction behaviors are correct, press continue or F5 to let 'er rip!

7. Repeat step 2 to view the result in-headset, or look at the Game View in Unity to see what happened.

8. Your intended result was not achieved. Additional exclamations of profanity are optional, yet likely.

9. Go back to step 1.

Yes: it can be an epic pain to debug in VR. Allow me to propose a solution which may assist you in performing basic tests on your interactive code without donning a headset, cursing, or breaking your hands / glasses / computer.

You most likely have some platform-agnostic input code attached to the visual, in-game representation of your Touch / Vive / GearVR / Daydream controllers. This code probably detects both collisions and raycast intersections with interactive objects in your game, and sends messages to said objects. I'd venture to guess that these interactions include (but are not limited to):

Touching an interactive object

Touching said object and then pressing a Trigger

Pointing at an interactive object

Pointing at said object and then pressing a Trigger

Let's consider a C# Interface which your interactive behaviors could implement to satisfy the abovementioned interactions:

    public interface IInteractable
    {
        // Handedness is an enum: Left, Right.
        void PointStart(Handedness handedness);
        void PointEnd(Handedness handedness);
        void PointTriggerStart(Handedness handedness);

        void TouchStart(Handedness handedness);
        void TouchEnd(Handedness handedness);
        void TouchTriggerStart(Handedness handedness);
        void TouchTriggerEnd(Handedness handedness);

        bool CanTouch { get; }
        bool CanPointAt { get; }
    }

Let's also consider a default implementation of IInteractable, written as an abstract base class from which all of our interactions shall derive:

    using UnityEngine;

    public abstract class CInteractable : MonoBehaviour, IInteractable
    {
        // Empty virtual bodies: derived classes override only what they need.
        public virtual void PointEnd(Handedness handedness) { }
        public virtual void PointStart(Handedness handedness) { }
        public virtual void PointTriggerStart(Handedness handedness) { }

        public virtual void TouchEnd(Handedness handedness) { }
        public virtual void TouchStart(Handedness handedness) { }
        public virtual void TouchTriggerEnd(Handedness handedness) { }
        public virtual void TouchTriggerStart(Handedness handedness) { }

        protected bool _canPointAt = true;
        public bool CanPointAt { get { return _canPointAt; } }

        protected bool _canTouch = true;
        public bool CanTouch { get { return _canTouch; } }
    }

Now, obviously you won't (and can't - it's an abstract class) put a CInteractable script on your game's interactive objects; CInteractable doesn't actually do anything but facilitate. You may be asking, "Why are CInteractable's methods not abstract?" There are two reasons, the first being that making the methods virtual allows a class which derives from CInteractable to not implement an override. For example, if some interaction behavior doesn't care about when the user stops pulling the trigger while touching an object, then TouchTriggerEnd need not be overridden (illustrated in the example to follow - ColorChangeInteraction). The second reason is more complicated, involving Reflection and Custom Unity Inspectors; I will return to this later.

Let's consider an interaction behavior which actually does something. Here's a simple script, ColorChangeInteraction, which changes an object's color upon interacting with it in different ways. Please note that ColorChangeInteraction derives from CInteractable, which, as you'll recall, derives from MonoBehaviour and implements IInteractable:

    using UnityEngine;

    public class ColorChangeInteraction : CInteractable
    {
        [SerializeField]
        private Color _touchColor = Color.magenta;

        [SerializeField]
        private Color _triggerColor = Color.yellow;

        private Color _defaultDiffuseColor;
        private Renderer _renderer;

        private void Awake()
        {
            _renderer = GetComponent<Renderer>();
            if (_renderer != null)
            {
                // Remember the starting color so it can be restored later.
                _defaultDiffuseColor = _renderer.material.color;
            }
            else
            {
                // Without a Renderer there is nothing to recolor; remove this component.
                print("No Renderer on: " + name);
                Destroy(this);
            }
        }

        public override void TouchStart(Handedness handedness)
        {
            SetColor(_touchColor);
        }

        public override void TouchTriggerStart(Handedness handedness)
        {
            SetColor(_triggerColor);
        }

        //and so on for any other IInteractable methods I care about...

        void SetColor(Color color)
        {
            _renderer.material.color = color;
        }
    }

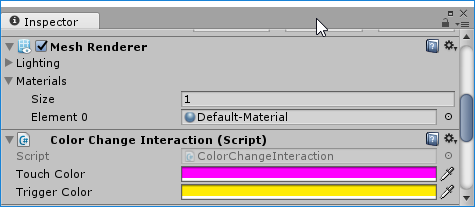

The Unity Inspector window for this script looks like the following image. Nothing groundbreaking here:

Following authorship of the aforementioned interface, abstract base class, and implementation (ColorChangeInteraction), you would simply add a ColorChangeInteraction (and any other interactions you have written) to an object in your scene with which you wish to interact. Your input code - simply put - "sends messages" to scripts derived from CInteractable on said object when you touch it, or press a trigger while touching it. Your controller input code might look something like this:

    private void OnTriggerEnter(Collider other)
    {
        IInteractable[] interactables = other.GetComponentsInChildren<IInteractable>();
        System.Array.ForEach<IInteractable>(interactables, i =>
        {
            i.TouchStart(Handedness.Right); //assume the right hand for simplicity's sake.
        });
    }
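The interface above exposes CanTouch, but the handler shown does not consult it. Here is a minimal sketch of how input code might respect that flag before sending a message. It is plain C# with no Unity types so that it can run anywhere; ITouchable, TouchDispatcher, and RecordingTouchable are hypothetical names invented for this illustration and are not part of the article's code.

```csharp
using System;
using System.Collections.Generic;

public enum Handedness { Left, Right }

// Trimmed-down stand-in for the article's IInteractable (touch members only).
public interface ITouchable
{
    bool CanTouch { get; }
    void TouchStart(Handedness handedness);
    void TouchEnd(Handedness handedness);
}

// Hypothetical helper: forwards TouchStart only to targets that allow touching.
public static class TouchDispatcher
{
    // Returns how many targets actually received the message.
    public static int SendTouchStart(IEnumerable<ITouchable> targets, Handedness hand)
    {
        int notified = 0;
        foreach (ITouchable target in targets)
        {
            if (!target.CanTouch) continue; // skip objects that opted out
            target.TouchStart(hand);
            notified++;
        }
        return notified;
    }
}

// Minimal test double that records the calls it receives.
public class RecordingTouchable : ITouchable
{
    public bool CanTouch { get; set; } = true;
    public List<string> Log { get; } = new List<string>();

    public void TouchStart(Handedness handedness) { Log.Add("TouchStart:" + handedness); }
    public void TouchEnd(Handedness handedness) { Log.Add("TouchEnd:" + handedness); }
}
```

In a Unity project, the array returned by GetComponentsInChildren<IInteractable>() could be filtered the same way inside OnTriggerEnter, with a matching OnTriggerExit handler calling TouchEnd.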

So far, we have laid down a general framework which allows us to add any number of interaction behaviors to an object, and call functions on those behaviors when interactions such as touching take place. Now, let us address the crux of the matter: how can we test ColorChangeInteraction or any other interactive behaviors without following the 9-step program I outlined at the beginning of this article?

The answer lies in what I hope you'll find is a creative use of .NET Reflection coupled with Unity's Custom Inspector feature. If you're unfamiliar with Unity Custom Inspectors, have a look - here's a quick read/video. If you're unfamiliar with .NET Reflection - that's a slightly more complex topic, of which we fortunately only scratch the surface. .NET Reflection allows (amongst other things) a class to get metadata/information about other classes. In the simplest relevant example, class A can retrieve a list of the public methods contained in class B by calling typeof(B).GetMethods().
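To make the "class A inspects class B" idea concrete, here is a small console sketch that needs no Unity. The classes A and B and their method names are made up for the illustration. Note that Type.GetMethods() with no arguments returns public methods only, and that the result includes methods inherited from System.Object, such as ToString.

```csharp
using System;
using System.Linq;
using System.Reflection;

// Hypothetical class whose public methods we want to discover.
public class B
{
    public void Jump() { }
    public void Crouch() { }
    private void Secret() { } // not public, so GetMethods() will not return it
}

public static class A
{
    // Names of the public methods on the given type, sorted for stable output.
    public static string[] PublicMethodNames(Type type)
    {
        return type.GetMethods()                 // public methods, inherited ones included
                   .Select((MethodInfo m) => m.Name)
                   .Distinct()
                   .OrderBy(n => n, StringComparer.Ordinal)
                   .ToArray();
    }

    public static void Main()
    {
        foreach (string name in PublicMethodNames(typeof(B)))
        {
            Console.WriteLine(name);
        }
    }
}
```

The inherited entries in that list are the reason a custom inspector has to filter the result before drawing anything.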

Let's consider a Unity Custom Inspector class, InteractableEditorBase<T> which takes advantage of C# generics for the purpose of reuse and reflection. Note: the #if UNITY_EDITOR preprocessor directive at the top of the following script allows you to place this script wherever you please in your project, rather than explicitly in a folder named "Editor" as per the Unity documentation.

    #if UNITY_EDITOR
    using System.Reflection; // MethodInfo
    using UnityEditor;
    using UnityEngine;       // GUILayout

    public class InteractableEditorBase<T> : Editor where T : CInteractable
    {
        public override void OnInspectorGUI()
        {
            DrawDefaultInspector();
            T myTarget = (T)target;
            int count = 0;

            EditorGUILayout.BeginHorizontal();
            foreach (MethodInfo MI in typeof(T).GetMethods())
            {
                // Only draw buttons for public methods declared (i.e. overridden) in T itself.
                if (MI.DeclaringType == typeof(T))
                {
                    if (GUILayout.Button(MI.Name))
                    {
                        // Assumes the method takes a single Handedness argument.
                        MI.Invoke(myTarget, new object[] { Handedness.Right });
                    }

                    count++;
                    // Start a new row after every three buttons.
                    if (count == 3)
                    {
                        count = 0;
                        EditorGUILayout.EndHorizontal();
                        EditorGUILayout.BeginHorizontal();
                    }
                }
            }
            EditorGUILayout.EndHorizontal();
        }
    }
    #endif

The important part of InteractableEditorBase<T> is found in the foreach block. After we retrieve a MethodInfo array (essentially a list of information about public methods in T) from typeof(T).GetMethods(), we iterate through said array, evaluating the DeclaringType property on each MethodInfo.

We are interested in answering the question: "Is the method I'm looking at in class T declared in a base class from which T derives, or from within T itself?" Stated differently, with our class structure in mind: "Is this method declared in CInteractable, or have I explicitly overridden it in a derived class, such as ColorChangeInteraction's overriding of the TouchStart method?"

If the method in question's DeclaringType is T, then we would like our Custom Inspector to draw a button containing the function's name, which calls Invoke() on said function upon being pressed.
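The DeclaringType check can be tried outside Unity. The sketch below uses stand-in classes (InteractableBase and ColorChange are hypothetical substitutes for CInteractable and ColorChangeInteraction) and adds one thing the inspector code does not have: a signature check, so that a public method with a different parameter list never reaches Invoke(), where it would throw a TargetParameterCountException. That extra guard is my assumption about what a hardened version might want, not something the original system requires.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

public enum Handedness { Left, Right }

// Stand-ins for CInteractable and ColorChangeInteraction, without Unity types.
public abstract class InteractableBase
{
    public virtual void TouchStart(Handedness handedness) { }
    public virtual void TouchEnd(Handedness handedness) { }
}

public class ColorChange : InteractableBase
{
    public string LastColor = "default";

    public override void TouchStart(Handedness handedness) { LastColor = "magenta"; }

    // Public and declared here, but it takes no Handedness argument.
    public void Reset() { LastColor = "default"; }
}

public static class InspectorButtons
{
    // Methods declared (or overridden) directly on T that accept exactly one Handedness.
    public static List<MethodInfo> InvokableMethods<T>() where T : InteractableBase
    {
        return typeof(T).GetMethods()
            .Where(m => m.DeclaringType == typeof(T)) // same test the inspector uses
            .Where(m =>
            {
                ParameterInfo[] p = m.GetParameters();
                return p.Length == 1 && p[0].ParameterType == typeof(Handedness);
            })
            .ToList();
    }
}
```

For ColorChange, the first filter keeps TouchStart and Reset and drops TouchEnd, which is still declared on the base class; the second filter then drops Reset. Invoking the surviving MethodInfo with new object[] { Handedness.Right } runs the override exactly as a button press would.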

We're almost done. We have our generic implementation of a custom editor where T is some derived class of CInteractable. Our final step is to derive from InteractableEditorBase<T> for our behavior scripts which actually do something (like ColorChangeInteraction). This is very easy.

Using the preprocessor directive trick mentioned before InteractableEditorBase<T>, we can actually declare a custom inspector for ColorChangeInteraction inside the same .cs file which houses ColorChangeInteraction. We add the following source code above and below the existing ColorChangeInteraction.cs:

    #if UNITY_EDITOR
    using UnityEditor;
    #endif

    public class ColorChangeInteraction : CInteractable
    {
        .
        .
        .
    }

    #if UNITY_EDITOR
    [CustomEditor(typeof(ColorChangeInteraction))]
    public class ColorChangeInteractionEditor : InteractableEditorBase<ColorChangeInteraction>{}
    #endif

If we look at the Inspector window for ColorChangeInteraction, our custom inspector will generate buttons for every method we have overridden from CInteractable. Clicking these buttons while our scene is running will actually invoke the methods named in the buttons' titles:

What this really means: Rather than putting on one's headset, playing the scene, picking up controllers, and finally interacting with an object... You can just press a button in the Unity Inspector, and leave your headset / controllers wherever they happen to lie.

The following video shows this system in action with ColorChangeInteraction. Additional methods have automatically been exposed to the Custom Inspector - I overrode PointStart, PointEnd, and PointTriggerStart in ColorChangeInteraction - our system is smart enough to pick up those changes and provide new Buttons dynamically.

In the first part of the video, I am wearing the headset and interacting with a series of cubes which have a ColorChangeInteraction script attached to them. In the second part of the video, I remove the headset from my head, place the controllers on the floor (...and pick up my glasses which have fallen) - and use the Custom Inspector to simulate interaction with the cubes.

Hopefully, you can take this basic example and extrapolate upon the ideas I have laid out in this article to save yourself some time, frustration, and pairs of glasses for those of us too scared to get laser vision correction.

This system saves me time for sure, but your mileage may vary. Yes - if your behavior code operates based on hand / headset position at the time of interaction (e.g. an actor looks at the player), then this system might not be right for helping you evaluate those behaviors.

Peer review away! I'm looking forward to your questions, feedback, hateful comments, death threats, and other forms of semi-anonymous internet communication.

Best regards,

Adam Saslow
