Trending

Opinion: How will Project 2025 impact game developers?

The Heritage Foundation's manifesto for the possible next administration could do great harm to many, including large portions of the game development community.

Featured Blog | This community-written post highlights the best of what the game industry has to offer. Read more like it on the Game Developer Blogs or learn how to Submit Your Own Blog Post

When you need to delete elements mid-sequence you'll maybe reach for a linked list.

Not so fast!

Contiguous buffers is good. Vectors might still be faster, or there may be other possibilities such as 'hosting' your list inside a vector.

Motivation

I actually have two goals in writing this post:

to talk about the general idea of 'hosting' a list within a vector

to introduce a specific data structure that will be reused in my next post

Hosting a list in a vector ain't rocket science, but I think it's a good technique to have in mind when designing data structures generally, and it's definitely a worthwhile topic to include in a series about vector based data containers.

We'll be looking at one specific example, based on this technique, for which I have a fairly specific set of requirements.

The requirements come from a larger use case for mesh representation in PathEngine. We'll look at the overall context for these requirements in more detail in my next post and together I hope that these two posts serve as a compelling example for how vector hosted lists can be used to make a good solution to some concrete real world problems!

What I need is essentially a container for a bunch of elements, but I'll need to:

iterate through the elements

hold on to references to individual elements

add new elements

delete individual elements

without changes to the set of elements (and in particular element deletion) affecting existing references to the remaining elements.

(We'll look at the requirements for the actual element type in more detail later on.)

A straight forward linked list data structure would be fine for this.

With a linked list we can use pointers to list nodes as both iterators and element references, with these pointers remaining valid as references across the removal and deletion of other list nodes.

In his keynote for GoingNative 2012, Bjarne Stroustrup talks about some benchmarks comparing std::vector and std::list. The (perhaps surprising) result is that std::vector works out to be faster in practice than std::list in some situations where lists would traditionally have been the natural choice.

This point is discussed in more detail in this blog post. ('Why you should never ever ever use linked list in your code again', the article is also reposted on code project with some additional discussion in the comments there.)

I recommend reading that blog post for a more detailed discussion of this issue, concrete benchmarks, and so on, but in a nutshell it seems that as the difference in latency between CPU cache and main memory becomes more significant, contiguous buffers become increasingly desirable, and we see vectors out-performing pointer-based lists in many cases where arbitrary non-contiguous element manipulation is required.

So the reduced algorithmic complexity for linked list manipulations can be outweighed by the much lower constant factors resulting from better caching for contiguous buffers.

So should we just switch to std::vector?

Well, of course, all depends on context, and Kjell admits that the title of his post is hyperbole.

My experience is that we ignore algorithmic complexity issues at our peril, and I'd much rather get the algorithmic complexity of linked list manipulations, whilst keeping the advances of contiguous buffers, if this possible.

More fundamentally, apart from being (algorithmically) inefficient, std::vector erase() doesn't actually meet our requirements.

Erasing an element from the middle of a vector results in all the other elements after that being moved down, to close the 'hole'. And that means that (whether we use element indices or pointers as element references), references to elements after an erased element will be invalidated (or at least need to be adjusted) after each element deletion.

So a straight switch to std:vector doesn't work here. We need some of the detail semantics of linked lists with regards to iterator validity, in addition to performance characteristics.

It turns out that we can actually take any list data structure (i.e. a data structure based on fixed sized nodes and pointers to these nodes), and convert it to a vector hosted version.

The vector buffer takes the place of the memory heap, and selecting an unused entry in the buffer takes the place of arbitrary memory allocation.

And we can then also use element indices in place of generalised pointers for node references, so that these node references remain valid across vector capacity changes.

So another option could be to take some standard linked list code, and translate this to a vector hosted version. Let's look at this in some more detail.

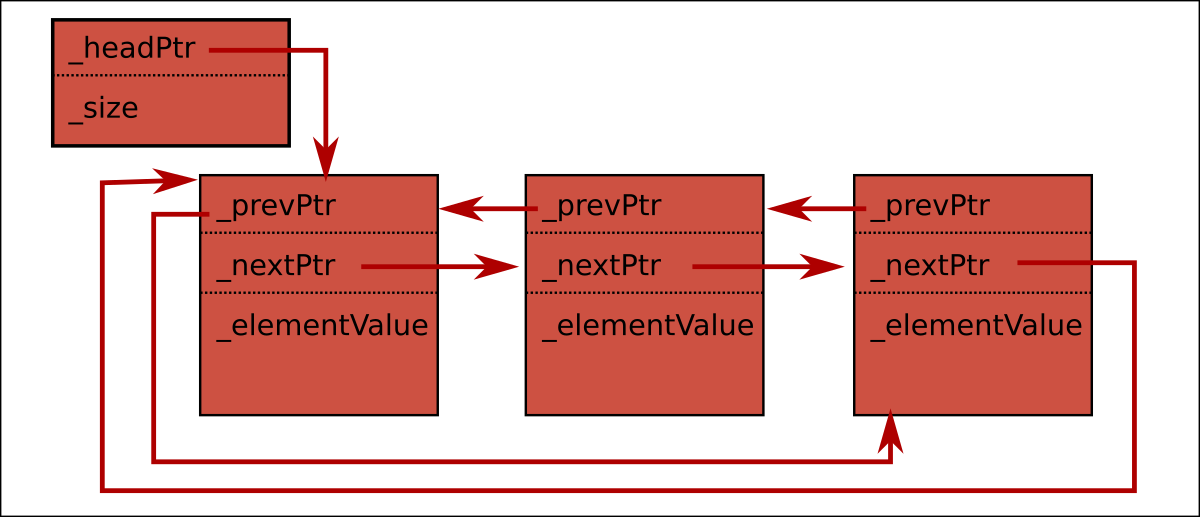

If we have a double linked list structure that looks something like this:

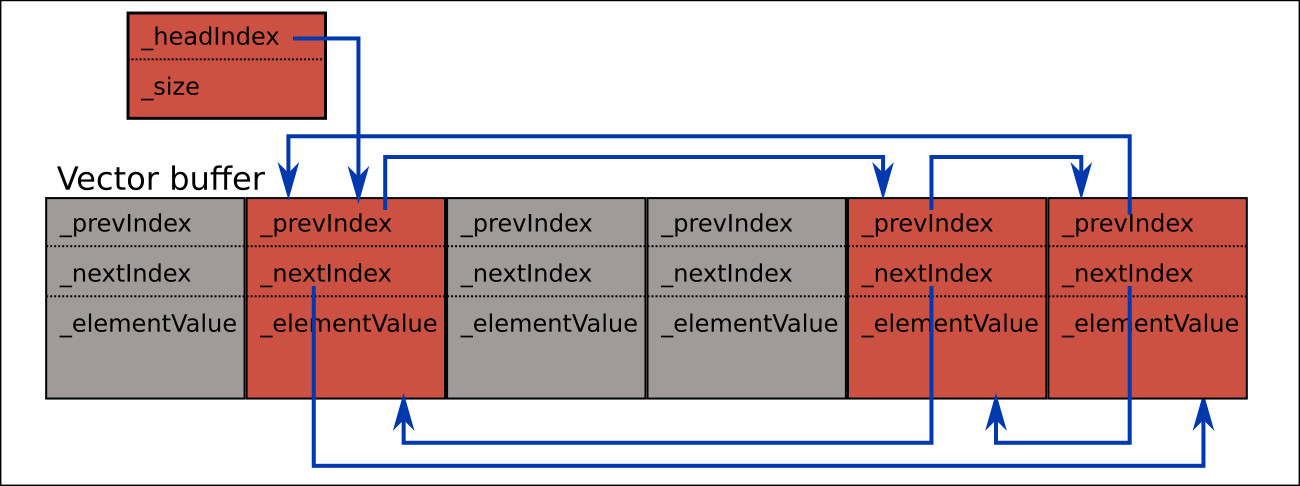

We can 'host' this in a single contiguous vector buffer as follows:

We can start by allocating buffer entries in order, but after entries are deleted there are going to be some holes (shown here in grey) and then we need to do our own 'memory allocation'.

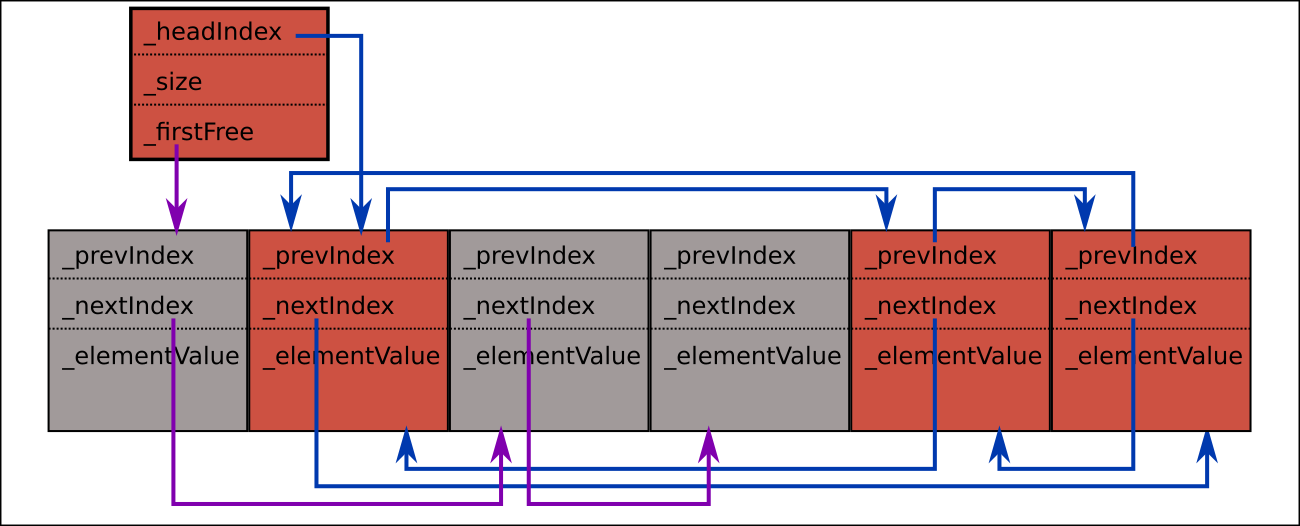

It's much simpler than general case arbitrary memory allocation, though, and all we actually need to do for this is to chain the unused entries together in free list.

A singly linked list is sufficient for this purpose, and we can reuse _nextIndex:

To 'allocate' a new node we take the element at _firstFree and update _firstFree to the next in the free list (or, if there are no free elements, push a new element on the end of the vector).

If we have a bunch of linked list manipulation code that was designed for the original layout (a traditional pointer based list), it's straightforward to adapt this to the new 'hosted' layout. We basically just:

change iterator type (and associated increment, decrement and dereference operations)

switch to the free list based 'memory allocation' strategy.

So I guess a first point to make here is that 'hosting' a list data structure in this way is really not so hard to set up.

But what does this give us?

If you squint a bit, you may notice that the end result is actually very similar to what you get if you stick with a pointer based list, but add in a custom allocator that allocates list nodes from a single (contiguous) memory pool. So to some extent, you could argue that you might as well just go ahead and do that instead. But one advantage in the case of 'vector hosting' is that the underlying buffer can actually now be resized dynamically in a vector kind of a way (so extended with amortised cost, and 'shrunk to fit', as desired). And there are other reasons to prefer element indexing to generalised pointers, for example with element indices being easier to load and save.

Just as with the memory pool, we'll see some reduction in memory allocation overhead due to the simpler memory allocation strategy.

And, just as with the memory pool, our list nodes are now all in one contiguous memory space, so we can expect better locality of references, and hopefully some increased performance due to this.

But, unlike std::vector, the hosted list doesn't keep our elements ordered in memory, and iterating through the elements in sequence order can potentially mean jumping around arbitrary positions in the host buffer, which isn't so good.

And those _prevIndex and _nextIndex values are a bit chunky.

In our specific use case at PathEngine, _elementValue is actually going to be just a single integer value, and adding _prevIndex and _nextIndex multiply memory footprint by 3!

Our specific use case is in the implementation of a mesh data structure. In most cases we expect to only edit part of the mesh at a time, we're ok with periodically 'consolidating' mesh data, and we'd like our sequence representation to be as close to a raw vector as possible!

So we're going to implement this a bit differently, but we've seen how straightforward it is to 'host' lists in a vector, and we'll still leverage this idea to some extent.

What we'll do is to implement something pretty close to a raw vector, but with a concept of 'dead entries'.

We basically replace the standard 'copy everything down to make contiguous' deletion semantics from std::vector with a mechanism that just flags elements as dead, on deletion, but leaves these 'dead' elements in place, and reuses them as and when new elements are required.

It turns out that we can do this, in our specific use case, without needing any extra data for flags or _nextIndex, but we'll need to look at the specific requirements for _elementValue in a bit more detail.

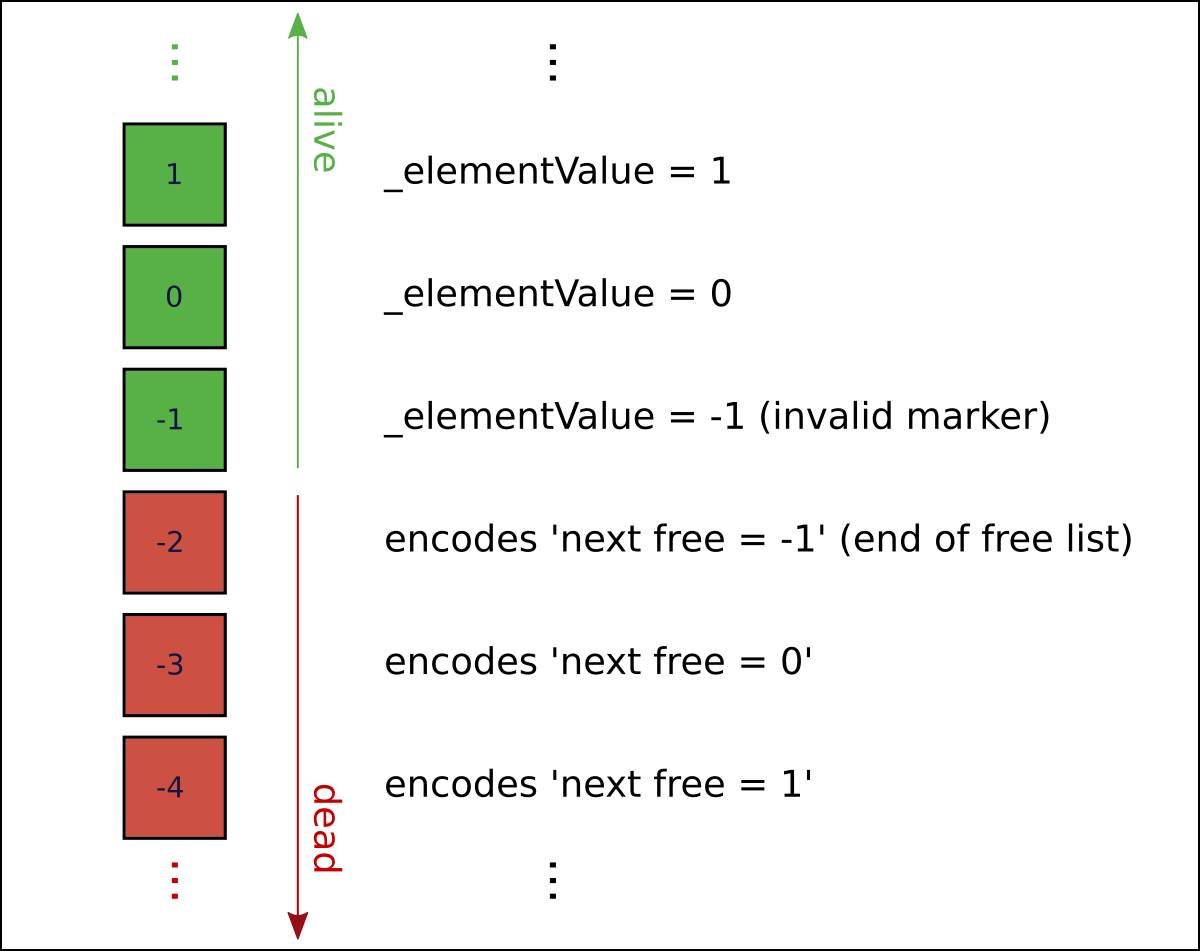

In our mesh use case, element value can either be a positive integer (0 included), or a special value of -1. (-1 is used an a kind of 'invalid value' indicator). This leaves values below -1 available for marking dead entries.

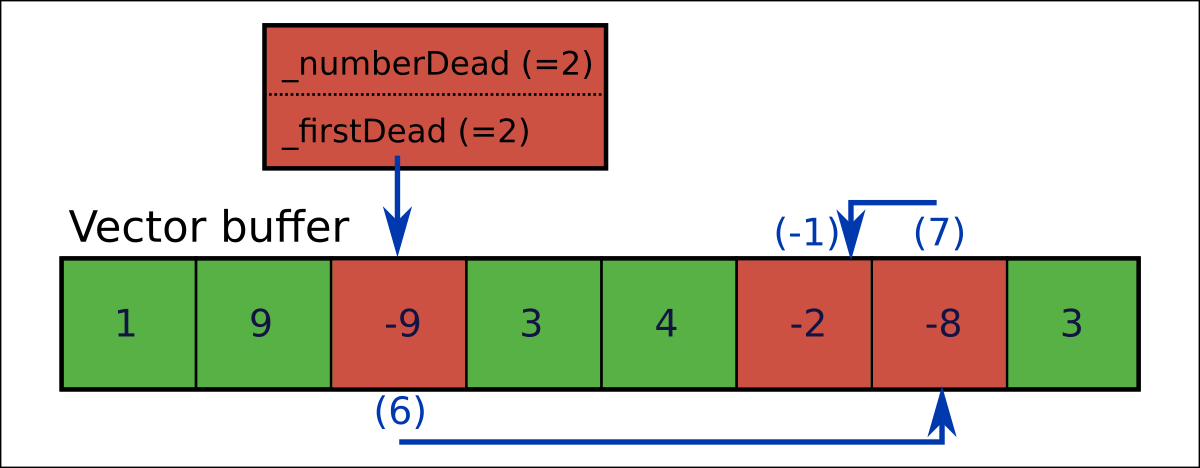

So we define any element with _elementValue set -1 as flagging as a dead entry. But the actual negative value used here can also encode a _nextIndex value for our free list.

The _nextIndex values needing to be encoded include positive integers (0 included), specifying the next free entry, and (another) special case value of -1, which in this case means 'no more free list entries' (so the equivalent of a null pointer).

To encode _nextIndex (an integer greater than or equal to -1) as a dead element marker (an integer less than -1) we use the mapping f(x) = -3 - x. If you check, you'll see that the same function also works for conversion the other way (to obtain _nextIndex back from a dead element marker).

In PathEngine this encoding is actually wrapped up in a 'named integer' class, which helps avoid programmer errors. This adds a lot of lines of code, however, so I'll be stripping the named integer stuff out of the example code, and we'll work with raw integer values directly.

Anyway, we end up with a sequence representation that looks something like this:

This stores the sequence [1,9,3,4,3], but note that, in order to iterate through this sequence, we'll need to check for and skip dead entries as we go.

By keeping track of the number of dead entries we can work out the actual number of contained elements based on the size of the underlying vector.

A stripped down implementation for this is as follows:

#include <cassert> #include <vector> #include <cstdint> class cVectorWithDeadEntries { std::vector<int32_t> _v; int32_t _firstDead; int32_t _numberDead; public: cVectorWithDeadEntries() { _firstDead = -1; _numberDead = 0; } bool empty() const { return _v.empty(); } int32_t size() const { return static_cast<int32_t>(_v.size()); } int32_t addEntry() { int32_t index; if(_firstDead == -1) { index = size(); _v.push_back(-1); } else { --_numberDead; index = _firstDead; _firstDead = -3 - _v[index]; _v[index] = -1; } return index; } void markAsDead(int32_t i) { _v[i] = -3 - _firstDead; _firstDead = i; ++_numberDead; } bool entryIsDead(int32_t i) const { return _v[i] < -1; } int32_t numberDead() const { return _numberDead; } int32_t operator[](int32_t i) const { assert(!entryIsDead(i)); return _v[i]; } void setValue(int32_t i, int32_t value) { assert(!entryIsDead(i)); assert(value >= -1); _v[i] = value; } };

We reuse std::vector here to avoid reimplementing the wheel. (Although, in PathEngine, we actually reuse a custom vector implementation!) In real code, however, this means you'll probably find yourself wanting to add some more pass through methods to access the functionality of that underlying vector (such as different constructor versions, and things like swap(), or an explicit push_back() operation).

Note the explicit setValue() method. We could also, alternatively, return some kind of proxy object from operator[], to allow element values to be set directly through that indexing operator.

If a lot of elements get deleted, we can end up with a lot of dead elements, and then it's nice to be able to free up some of this memory and get back to a contiguous sequence of elements:

void consolidate(std::vector<int32_t>& remaps) { remaps.clear(); remaps.resize(size(), -1); _firstDead = -1; _numberDead = 0; int32_t remapped = 0; for(int32_t i = 0; i != size(); ++i) { if(!entryIsDead(i)) { remaps[i] = remapped; _v[remapped] = _v[i]; ++remapped; } } _v.resize(remapped); }

If you hold on to element references across this call then these element references can be invalidated, but a table of remaps is returned to enable you to update these references.

We'll see a bit more about exactly how cVectorWithDeadEntries is actually used, in PathEngine, in the next post, but it should already be clear that there can be good alternatives to old fashioned linked lists, if you're willing to knock up a bit of custom container code.

Hosting lists in a contiguous buffer can give you some significant advantages, in both cache efficiency and reduction of memory allocation overhead.

Direct element indexing can also be an important additional advantage. cVectorWithDeadEntries effectively gives you random access read and write permissions to the contained sequence, and this may allow you to do some stuff you wouldn't be able to do with a linked list. And element indices are a lot more convenient when making data structures persistent, and can be easily packed into a smaller number of bytes if necessary.

If you're working with linked lists (or some other linked data structure with fixed size nodes), and you need the best possible performance, consider:

switching to a std:vector

hosting your data structure in a vector

building a custom vector hosted container!

Benchmark, and test what is actually fastest in your specific application context!

** This is a repost from upcoder.com, please check the existing comment thread for this post before commenting. **

Read more about:

Featured BlogsYou May Also Like